Marth's reverse Dolphin Slash (up-B) is an important technique that people tell you to learn. They're right. But when I tried to do it, I couldn't. I figured out a couple of key things that really helped, and I want to share them.

The inputs are simple. You do up-B, and then during the startup frames (a very small time window), you press left (if you were facing right). This press to the left has to be very fast. I won't discuss why this technique is useful; other people have done that. I just want to talk about how to do it.

Also, just to be clear, you can face right, hold up-left, and then hit B, and you will do the Dolphin Slash behind you and turn around. None of the information I've read is really clear about this, but I'm pretty sure reverse Dolphin Slash is different and requires doing it the hard way: up-B first, then pressing behind you second, as a separate input.

At first I thought the problem was that my hands were slow. I figured I'd just keep trying to do it super fast, and hopefully I'd get it. Well, I didn't get it. I went into Training Mode and tried it in slow motion to make sure I was doing the inputs right. It worked. But at regular speed I was hopeless.

Then one day, I had a thought. You know what would save time? Don't push the dstick all the way up.

So I tried doing up-B by itself, without pressing the dstick all the way up. I found you only have to press it a tiny bit further than for up-tilt, which really isn't very far. Only a fraction of the way up is enough.

The main reason I couldn't do it was that I was pressing the dstick all the way up and then pressing it to the side, and that takes too long. Maybe if you play in tournaments and you're really good, you could press it all the way up and still be fast enough. But I sure can't.

Well, once I had this insight, I was able to do reverse Dolphin Slash successfully about half the time in only 10 minutes of practice.

But I didn't just start doing it. I practiced an intermediate step that I think was a really good idea. If any guide had told me to practice it this way, it would have really helped me.

Press the dstick up halfway. Hold it there. Now if you hit B, you will Dolphin Slash. Try it. So instead of pressing up-B for Dolphin Slash, you start with half the work done: you just have to press B. Now do this: press B and then, almost at the same time, press left (if facing right; in general, press behind you).

When I just tried to hit up-B then left, it was so hard I couldn't do it. But when I held up and then tried to hit B and left, it was so much easier that I could do it pretty much right away. It's not that hard to do one thing with your right thumb and one thing with your left thumb, very close together. Doing two things with your left thumb and something with your right, and coordinating the timing, is hard. But only one thing with each thumb isn't too hard.

So practice that a bunch and you can learn the timing of when to hit left relative to when to hit B, without a bunch of stress and failure. You can learn part of the technique by itself without having to be able to do the whole thing.

Once you're good at that, practice the dstick motion without B. Press it up only a little way, definitely not all the way up, and then jam it left hard and quick. Practice it to the right also.

When that feels OK, then try another small step. Press up a little ways, pause for a split second, then press B and left. So it's like doing it with up already pressed, but instead of just holding up and not thinking about it, you do the up press only a moment early, so it isn't totally separate.

Once you can do that, then try to do the whole thing. And because of all the little steps you did, I bet you'll be able to do it sometimes. Not all the time, but sometimes. And once you can do something 5% of the time, then you have a good start and you just practice more and increase that percent. Whereas if you can't do it at all, it's hard to get started and you'll need some easier steps.

So you press up a little ways and B, and then hard left. It won't work every time. You'll get some neutral B (Shield Breaker) and some side B (Sword Dance) at first. But now you should have a good enough idea of how to do it that you can practice until you get it consistent. These little steps to work up to it will get your foot in the door and make the technique approachable.
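The progression above can be written down as an ordered list of drills, which makes it easy to keep a simple practice log. This is a hypothetical sketch, not a tool from the post: the drill names paraphrase the steps described here, and the rule of moving on once a drill succeeds at least `advance_at` of the time is an assumed threshold you would pick yourself (the post only says that getting the full technique 5% of the time is a good start).

```python
# Hypothetical practice log for working up to reverse Dolphin Slash.
# The drill names paraphrase the steps in this post; the advance_at
# threshold is an assumption, not a number from the post.
DRILLS = [
    "hold up halfway, then press B and left almost together",
    "stick only: up a little, then jam left (and right)",
    "up a little, short pause, then B and left",
    "full reverse Dolphin Slash",
]


def success_rate(successes: int, attempts: int) -> float:
    """Fraction of attempts that worked (0.0 if nothing was attempted yet)."""
    return successes / attempts if attempts else 0.0


def current_drill(log: dict[str, tuple[int, int]], advance_at: float = 0.5) -> str:
    """Return the first drill whose success rate is still below advance_at.

    log maps a drill name to (successes, attempts).
    """
    for drill in DRILLS:
        successes, attempts = log.get(drill, (0, 0))
        if success_rate(successes, attempts) < advance_at:
            return drill
    # Every step is cleared: keep practicing the full technique.
    return DRILLS[-1]


log = {
    DRILLS[0]: (18, 20),  # 90%: move on
    DRILLS[1]: (9, 20),   # 45%: still below the assumed 50% threshold
}
print(current_drill(log))  # prints the stick-only drill
```

The point of the sketch is the same as the post's: you always know which small step to practice next, instead of failing at the whole technique over and over.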

Again, I'd like you to learn not just how to reverse Dolphin Slash, but also how to approach learning anything that's hard to get started with. This is a specific example that will help Marth players, but it's also about the method of how to learn.

For part 1 at my blog, click here.

For all parts, and some people's helpful replies, see my thread at Smashboards.

Elliot Temple

SSBM Training 1: Marth's SH Double Fair

Super Smash Brothers Melee (SSBM) is hard. And it's hard to get started. I've read a lot of guides and tips. A lot of the info is very helpful. But I think most of it is way too advanced for most players.

I'm not very good at SSBM, but I think most people are probably a lot worse. No offense. I've played games from a young age, I've played many different games and put a large amount of time into them, and I've been very, very good at some games. And I started playing Smash before SSBM came out. Not very well, but I've been familiar with Smash for a long time, and followed it much more closely than most fans.

I've been practicing SSBM. Mostly tech skill, alone. I like the game, I like understanding how it works, I like seeing how hard it is and facing a challenge, and I like having a better understanding of what the pros I watch in tournaments are doing, what it's like for them.

I have figured out some ways to practice that are more basic than are usually taught, and I think they could really help people. For example, people say to practice Marth's SH (short hop) double fair (forward air attack). But I can't do that. It's really hard. To some people, it's just the basics. But to me, it's an advanced skill that's going to take a lot of work. My hands have sped up a lot from practice, but I still have a long way to go to SH double fair.

So how do you work your way up? What's in between nothing and SH double fair? My main point in this post is to show you how to break down a technique, like SH double fair, into a bunch of intermediate steps you can practice one by one. Even something pretty simple can be divided into a lot of different things to practice, instead of just being all-or-nothing.

(And for my regular philosophy audience, take note: you can apply similar methods to many other topics outside of gaming. Treat this as a detailed concrete example which illustrates an important philosophical method, and see what you can learn about philosophy.)

- SH

Start with SH alone. To SH, just hit jump and let go fast (before you're in the air). Don't feel bad if you suck at it. I would stand there and hit jump and do nothing else, and Marth would full hop. It took me a ton of practice just to SH. Actually, first I learned to SH Peach, who has an easier one than Marth. Marth is 3 frames, Peach is 4, Fox is 2. Almost all the characters are in one of those three categories. If you have trouble, practice with a 4 frame SH character first. Here's the list of how many frames each character has for short hopping (smaller numbers are harder, meaning you have to let go of jump faster).
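To put those release windows in real time, here is a small conversion, assuming the game runs at 60 frames per second (so one frame is about 16.7 ms). The character list only covers the three examples given in this paragraph, using the frame counts stated here; it is not the full list.

```python
# Convert the short hop release windows mentioned above into milliseconds.
# Assumption: the game runs at 60 frames per second.
FPS = 60

# Frames you have to let go of jump within, per the numbers in this post.
SH_WINDOW_FRAMES = {"Peach": 4, "Marth": 3, "Fox": 2}


def frames_to_ms(frames: int, fps: int = FPS) -> float:
    """Return how many milliseconds a given number of frames lasts."""
    return frames * 1000 / fps


for character, frames in SH_WINDOW_FRAMES.items():
    print(f"{character}: {frames} frames = {frames_to_ms(frames):.1f} ms")
```

Running it prints 66.7 ms for Peach, 50.0 ms for Marth, and 33.3 ms for Fox, which shows how small the difference between a "4 frame" and a "3 frame" character really is.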

One of the cool things I found is that after I practiced Marth's SH a lot, even when I still wasn't very good at it, Peach became easy when I went back to her. And then once I practiced Sheik's 2 frame SH and went back to Marth, Marth felt easier. But you can't move up too early: just starting with Sheik wouldn't have done me any good if I couldn't get it at all.

- SH While Distracted

As an aside, let me say that being able to stand still and do a SH, and being able to do it while playing the game against an opponent, are different things. As one example, once you can SH OK, try to run forward and SH. You'll miss some because of the distraction. Once you get better at that, try shield stop SHs. That means you dash forward, then very quickly hit shield, then very quickly after that, short hop. Even once I was good at SHing in place, I couldn't do shield stop SHs without some practice. Linking together the things you practice makes each of them harder.

The point is, don't get frustrated if you thought you could SH, but then you try to do SH and something else, and suddenly you can't SH. It's going to happen. It's no big deal; you just need more practice until your SH is solid instead of barely working.

- SH Nair

Once you can SH, try to SH Nair (neutral air attack, meaning A with no direction). Hit jump then A. You'll probably miss some SHs from trying to hit A also. Don't worry, practice, you can learn this.

Now to the main point: if you jump and then hit A fast enough, you will land without going into a recovery animation from the nair. The best way to see this is to get the 20xx Hack Pack and turn on the flashing red and white for failed and successful L cancels. If you SH nair and hit A slowly, you will see Marth flash red. If you do it fast enough, Marth will not flash any color.

When I started, I couldn't do this. Marth would flash red. Maybe I could get it 10% of the time. But, again, you practice and you get better. This is a hell of a lot easier than SH double fair. It's a smaller step forward. This will get your hands faster while being a smaller and more achievable goal.

- SH Fair

Next, try to SH fair. If you do this quickly, Marth won't flash red. You have to be a little faster than with SH nair. (If you don't have the 20xx Hack Pack, you'll have to watch Marth and see whether or not he goes into his landing recovery animation from fair. That's a skill that takes practice. You can learn it early if you have to, but I'd really recommend getting the 20xx Hack Pack.)

- SH Uair

Next, try to SH uair (up air attack). Again, you'll have to be a little faster. You'll also have to learn to press the dstick (directional stick, the joystick used for moving) lightly so you don't double jump.

- SH Bair

If you can go even faster, you can do a SH bair (back air) with Marth and land without flashing red. If you do it successfully, Marth will turn around (so this one is easy to tell if you succeeded even without the 20xx Hack Pack).

- C Stick

Then go back through and practice all of these using the cstick (the little yellow joystick) instead of A (except nair, since you can't nair with the cstick). Again, this makes it harder. But it's possible, and with practice your hands will get faster. (As I write this, I can just barely bair with the cstick on a small percentage of attempts. One really interesting thing I noticed is that I can do it a lot more easily to the left than to the right: after hitting jump, I can press the cstick left faster than right. The only reason I can tell the difference is that I was so borderline on SH bairs that this tiny difference actually affected my results. I think that's a pretty cool thing to find out, and it gives me useful information and potentially something to practice. For example, once I can start to do some SH double fairs with the cstick, I'll practice to the left first, since that will be easier and let me have success sooner. Once I can do that a little, I'll practice to the right too. So doing it to the left first is another small step before doing it to the right.)

- SH, Fair, Double Jump

Next, try to short hop fair, then as soon as you start the fair, start mashing jump. If you're fast enough, you'll double jump instead of landing. You can also try to learn to press jump at the right timing instead of mashing.

Once you can do that (I can only do it 10% of the time as I write this), try to SH fair with cstick and then get the double jump (I can't do that yet).

- FH Double Fair

Practice doing full hops and then doing fair twice. The point here is to learn the timing for how soon you can do the second fair after the first one. It's not something that's hard, but you do need to practice and learn that timing. Practicing it separately will be helpful. You should also practice other aerials this way just to learn really accurately when you can do a second one. Learning how long your moves last is important and worth practicing for each move individually.

- SH Double Fair

Then, finally, after you progress through all those steps, you can work on SH double fair. That means you do a SH, then you do fair twice before you land. To succeed at this, you need to do the first fair extremely fast after jumping, even faster than any of the things you practiced above. Then you have to do the second fair with good timing as soon as it's possible.

To do a SH double fair correctly, you need to be 6 frames faster than SH, fair, double jump. Fair can hit the opponent on the 4th frame through the 7th frame. Double jump comes out in 1 frame (I think). So suppose you SH, fair, and then you double jump on your last frame in the air. To do a second fair instead, you'd need to be 6 frames faster so you'd have 7 frames of airtime left instead of 1. Then you'd be able to replace the double jump with the second fair and have enough time for it to fully complete the part of the move that can hit the opponent.
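The frame arithmetic in that paragraph can be checked with a few lines of Python. The inputs are the numbers stated above: fair can hit on frames 4 through 7, and the double jump comes out in 1 frame (which the post itself marks as uncertain). The function name is just for illustration, and the model assumes, as the paragraph does, that the second fair needs to reach its last active frame before landing.

```python
# Frame budget for replacing the double jump with a second fair,
# using the numbers stated in this post.
FAIR_LAST_ACTIVE_FRAME = 7  # fair can hit on frames 4 through 7
DOUBLE_JUMP_FRAMES = 1      # "comes out in 1 frame (I think)"


def frames_faster_needed(airtime_left: int, frames_needed: int) -> int:
    """How many frames earlier an action must start to finish before landing."""
    return max(0, frames_needed - airtime_left)


# Baseline: SH, fair, then double jump on the last airborne frame,
# so exactly 1 frame of airtime is left when the double jump starts.
airtime_left = DOUBLE_JUMP_FRAMES
print(frames_faster_needed(airtime_left, FAIR_LAST_ACTIVE_FRAME))  # 6
```

If any of the frame numbers turn out to be different, changing the two constants updates the answer.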

The point here isn't just to teach you to SH double fair with Marth. The bigger point is to show you how to practice things step by step and work your way up, a little at a time. Instead of failing to SH double fair over and over, it's better to gradually start with something a lot easier and then keep progressing to slightly harder things. It's a lot more fun to practice when you're learning new things, successfully, as you go along.

Whatever you want to learn, for whatever character, try to figure out a series of small steps that can help you build up to it. People commonly recommend pressing the buttons slowly at first and then speeding up. That is great advice, but there are other ways to practice too.

All the information in this post, I basically had to figure out myself (except the frame data). No one told me to try practicing bairs fast enough that I would turn around. But I find it really helpful as an intermediate step. I hope some Marths find this helpful, and that everyone understands the method of creating a gradual progression of small steps to practice. Most Melee training information doesn't cover little things this basic. I never heard anyone say "practice doing SH fair fast enough that you land without going into recovery from attacking", but I think it's a really useful idea. So hopefully this will encourage a lot of really new players who are struggling. By breaking things down into smaller steps like this, you'll be able to see your progress and succeed one step at a time.

For part 2 at my blog, click here. It provides another example with the same philosophical point.

For all parts beyond 2, and some people's helpful replies, see my thread at Smashboards.

Elliot Temple

Israel and Iran

Israeli Prime Minister Benjamin Netanyahu said:

Today the Cabinet will be briefed on the security challenges developing around us, first and foremost Iran's attempt to increase its foothold on Israel's borders even as it works to arm itself with nuclear weapons. Alongside Iran's direct guidance of Hezbollah's actions in the north and Hamas' in the south, Iran is trying to also to develop a third front on the Golan Heights via the thousands of Hezbollah fighters who are in southern Syria and over which Iran holds direct command. The fact that Iran is continuing its murderous terrorism that knows no borders and which embraces the region and the world has, to our regret, not prevented the international community from continuing to talk with Iran about a nuclear agreement that will allow it to build the industrial capacity to develop nuclear weapons.

This is very important. Obama wants Israel to be destroyed, and is actively pursuing that agenda, and most Americans don't recognize it. And Obama is far from alone in this matter.

... The agreement that is being formulated between Iran and the major powers is dangerous for Israel and therefore I will go to the US next week in order to explain to the American Congress, which could influence the fate of the agreement, why this agreement is dangerous for Israel, the region and the entire world.

I look forward to Netanyahu's speech and seeing the reactions. Maybe he can talk some sense into America. I really hope so.

Elliot Temple

Anti-Deviance Strategy

Most statements which are sufficiently deviant (from cultural norms) are assumed to be jokes by default. This is a way of protecting everyone from admitting that serious disagreements exist.

For example, if you say, "Thank you so much, you've persuaded me and I've learned a lot. I will completely rethink all my values and take on board the moral values you've shared with me." that reads as likely sarcasm because it would be much more rational than typical people in our culture.

And if you sound significantly less rational than the typical person, it again doesn't read as serious. For example, "I hate you for trying to use logic to share ideas with me that you think would help me. I'm very mad that you could be so arrogant as to think you could know anything useful to me. Did it ever occur to you that I don't want to think?" People will assume someone doesn't really mean that and is just making a joke, perhaps an exaggerated parody to imply the other guy is wrongly treating him like the person in the parody.

Statements which are reasonably normal are taken at face value, but statements which are deviant are frequently not treated as real statements in the usual way. This is a way of denying the existence of deviance and generally suppressing disagreement and pretending it doesn't exist. It's a strategy which helps people irrationally refuse to consider many disagreements and criticism.

Elliot Temple

Comments on Capitalism: A Treatise on Economics by George Reisman. Part 1

Capitalism: A Treatise on Economics is free at this link.

Water isn't just cheap because of mariginal utility (the utility of the 10,000th quart is much lower than of the first quart, lowering demand after prior quarts of water are supplied), it's also because a large supply is cheaply available from nature. If the question is about starting a new business to acquire and sell additional water, than the marginal utility of water is the relevant issue. But if the question is about the cheap pricing of current water, I think prices would be raised (despite that meaning fewer quarts sell) if not for competition. (BTW I'm ignoring issues like government regulations and the real-life water situation, and just treating it as a free market commodity.)

If I'm mistaken about any of this, then I'd still say the passage's explanation is unsatisfactory, because in that case it apparently didn't adequately clarify matters for me.

I agree with Reisman that Atlas Shrugged should have persuaded the whole country in about six weeks. That it didn't is one of the largest and most important unsolved philosophical problems. (Note I'm thinking of this problem broadly. Why didn't Popper's work persuade more people? Mises? Szasz? Deutsch? I consider those the same issue. Atlas Shrugged is the best, but there's a lot of good work which should have persuaded a lot of people but has only had limited success.)

The theory of marginal utility resolved the paradox of value which had been propounded by Adam Smith and which had prevented the classical economists from grounding exchange value in utility. “The things which have the greatest value in use,” Smith observed, “have frequently little or no value in exchange; and on the contrary, those which have the greatest value in exchange have frequently little or no value in use. Nothing is more useful than water: but it will purchase scarce any thing; scarce any thing can be had in exchange for it. A diamond, on the contrary, has scarce any value in use; but a very great quantity of other goods may frequently be had in exchange for it.”I'm not satisfied with the way this passage explains the issue (I don't think I have a disagreement with Reisman about economics here, though). It doesn't mention supply, demand and competition, which I think are crucial. It leaves a reader to wonder: why not charge the value of people's first quart of water? Sure you wouldn't sell the marginal 10,000th quart at that price (they'd find the price higher than the utility), but you could potentially make more profit, with less water inventory, at a higher price. The reason is because other water sellers would compete with you and that keeps prices down, not because of marginal utility. This answer's Smith's question about how something so valuable can be cheap.

The only explanation, the classical economists concluded, is that while things must have utility in order to possess exchange value, the actual determinant of exchange value is cost of production. In contrast, the theory of marginal utility made it possible to ground exchange value in utility after all—by showing that the exchange value of goods such as water and diamonds is determined by their respective marginal utilities. The marginal utility of a good is the utility of the particular quantity of it under consideration, taking into account the quantity of the good one already possesses or has access to. Thus, if all the water one has available in a day is a single quart, so that one’s very life depends on that water, the value of water will be greater than that of diamonds. A traveler carrying a bag of diamonds, who is lost in the middle of the desert, will be willing to exchange his diamonds for a quart of water to save his life. But if, as is usually the case, a person already has access to a thousand or ten thousand gallons of water a day, and it is a question of an additional quart more or less—that is, of a marginal quart—then both the utility and the exchange value of a quart of water will be virtually nothing. Diamonds can be more valuable than water, consistent with utility, whenever, in effect, it is a question of the utility of the first diamond versus that of the ten-thousandth quart of water.

Water isn't just cheap because of marginal utility (the utility of the 10,000th quart is much lower than that of the first quart, lowering demand after prior quarts of water are supplied); it's also because a large supply is cheaply available from nature. If the question is about starting a new business to acquire and sell additional water, then the marginal utility of water is the relevant issue. But if the question is about the cheap pricing of current water, I think prices would be raised (despite that meaning fewer quarts sell) if not for competition. (BTW I'm ignoring issues like government regulations and the real-life water situation, and just treating it as a free market commodity.)

If I'm mistaken about any of this, then I'd still say the passage's explanation is unsatisfactory, because in that case it apparently didn't adequately clarify matters for me.

Very soon thereafter, the whole Circle Bastiat, myself included, met again with Ayn Rand. We were all tremendously enthusiastic over Atlas. Rothbard wrote Ayn Rand a letter, in which, I believe, he compared her to the sun, which one cannot approach too closely. I truly thought that Atlas Shrugged would convert the country—in about six weeks; I could not understand how anyone could read it without being either convinced by what it had to say or else hospitalized by a mental breakdown.

That Rothbard letter can be found here. I think it may be Rothbard's most interesting writing.

The following winter, Rothbard, Raico, and I, and, I think, Bob Hessen, all enrolled in the very first lecture course ever delivered on Objectivism. This was before Objectivism even had the name “Objectivism” and was still described simply as “the philosophy of Ayn Rand.” Nevertheless, by the summer of that same year, 1958, tensions had begun to develop between Rothbard and Ayn Rand, which led to a shattering of relationships, including my friendship with him.

I agree with Reisman that Atlas Shrugged should have persuaded the whole country in about six weeks. That it didn't is one of the largest and most important unsolved philosophical problems. (Note I'm thinking of this problem broadly. Why didn't Popper's work persuade more people? Mises? Szasz? Deutsch? I consider those the same issue. Atlas Shrugged is the best, but there's a lot of good work which should have persuaded a lot of people but has only had limited success.)

13. Cf. Murray N. Rothbard, For a New Liberty (New York: Macmillan, 1973). In that book, Rothbard wrote: “Empirically, the most warlike, most interventionist, most imperial government throughout the twentieth century has been the United States” (p. 287; italics in original). In sharpest contrast to the United States, which has supposedly been more warlike even than Nazi Germany, Rothbard described the Soviets in the following terms: “Before World War II, so devoted was Stalin to peace that he failed to make adequate provision against the Nazi attack. . . . Not only was there no Russian expansion whatever apart from the exigencies of defeating Germany, but the Soviet Union time and again leaned over backward to avoid any cold or hot war with the West” (p. 294).

I already had a very low opinion of Rothbard. He has a lot of really awful views, such as anti-semitism and children-as-property. I didn't know this specific thing. (Some of his writing about economics is actually pretty decent.)

It is the division of labor which introduces a degree of complexity into economic life that makes necessary the existence of a special science of economics. For the division of labor entails economic phenomena existing on a scale in space and time that makes it impossible to comprehend them by means of personal observation and experience alone. Economic life under a system of division of labor can be comprehended only by means of an organized body of knowledge that proceeds by deductive reasoning from elementary principles. This, of course, is the work of the science of economics. [emphasis mine]

I disagree with this epistemology, which holds that you start with some foundations and deduce the rest. For info on my epistemology, see my David Deutsch and Karl Popper reading recommendations, and my own writing on my websites.

Elliot Temple

Twitter Threading is Terrible

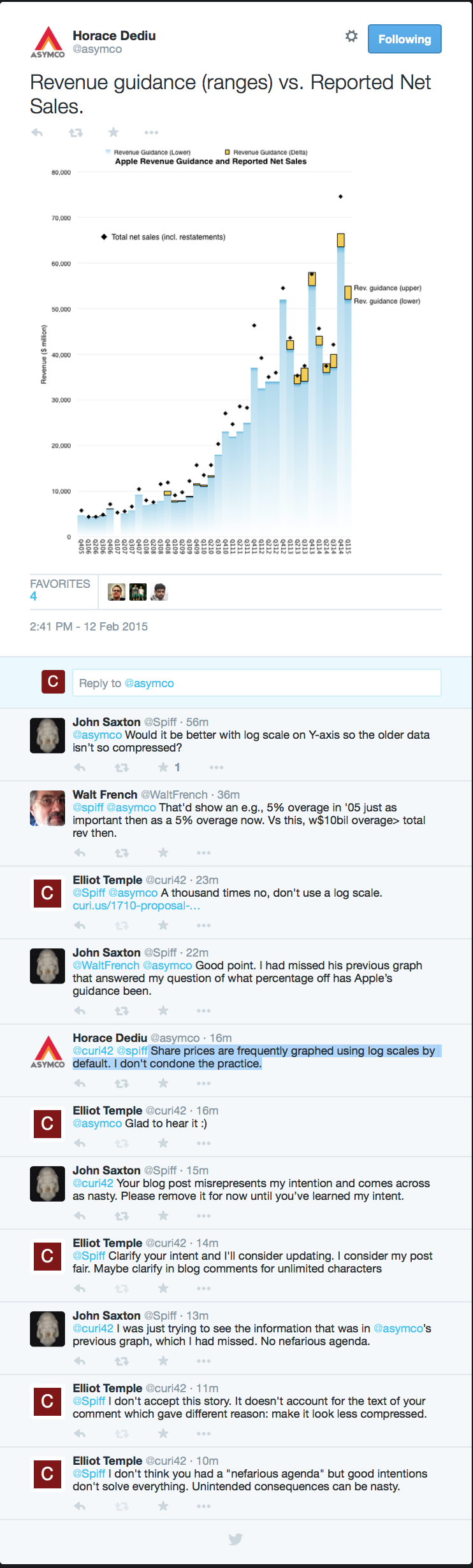

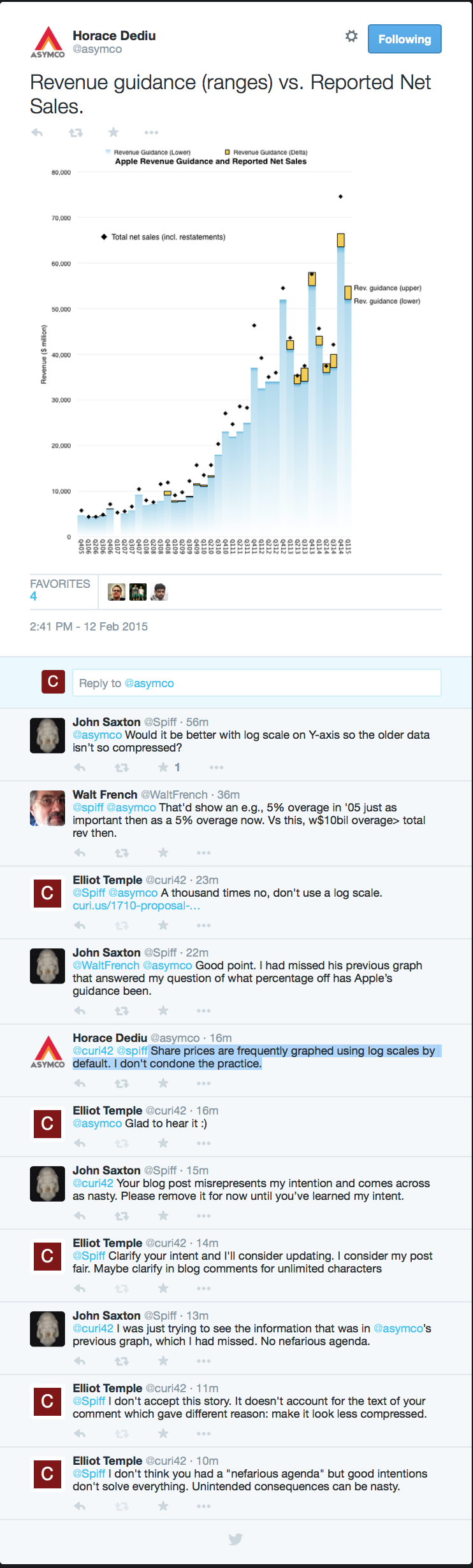

Here is a conversation I had on Twitter, in order. This is fine:

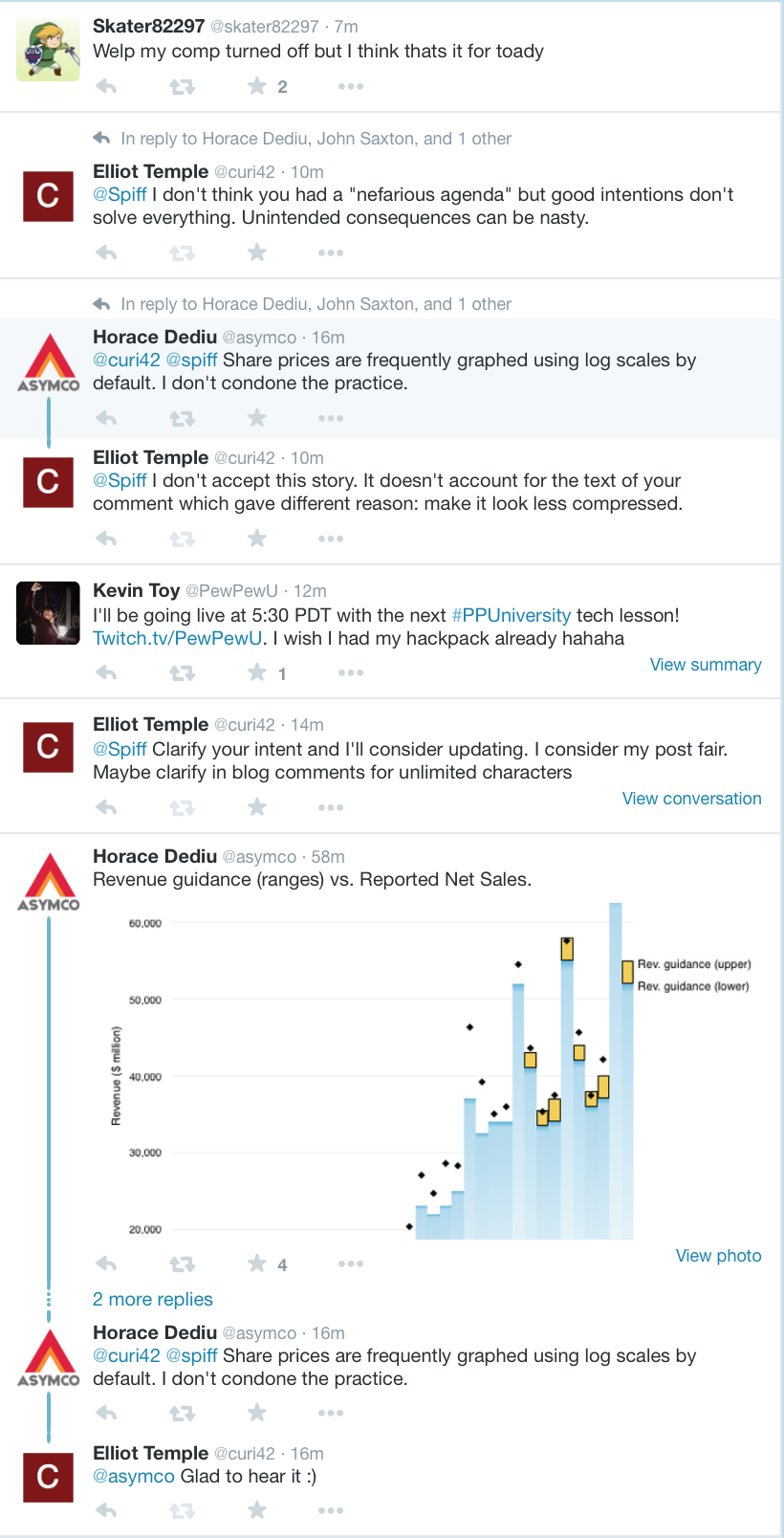

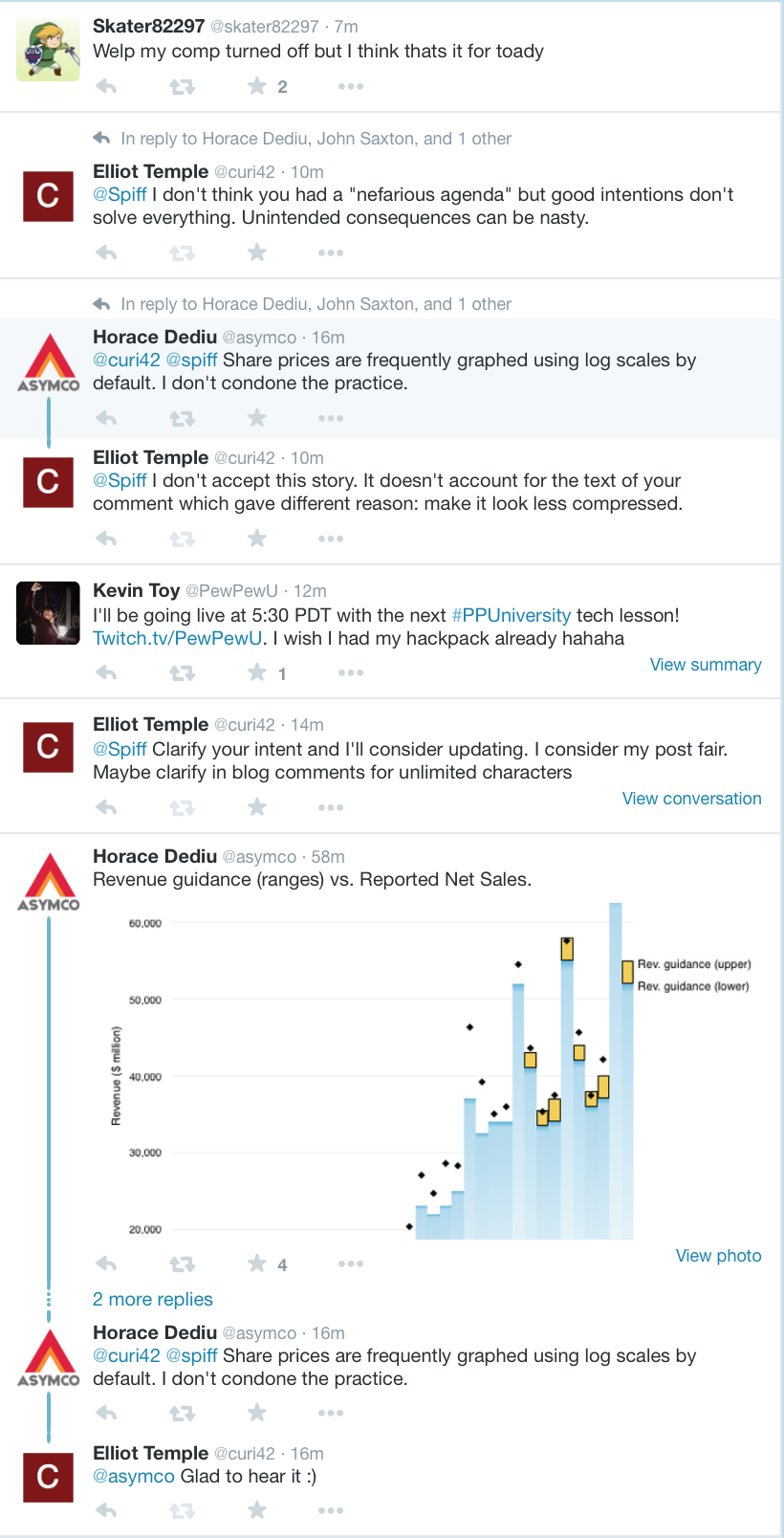

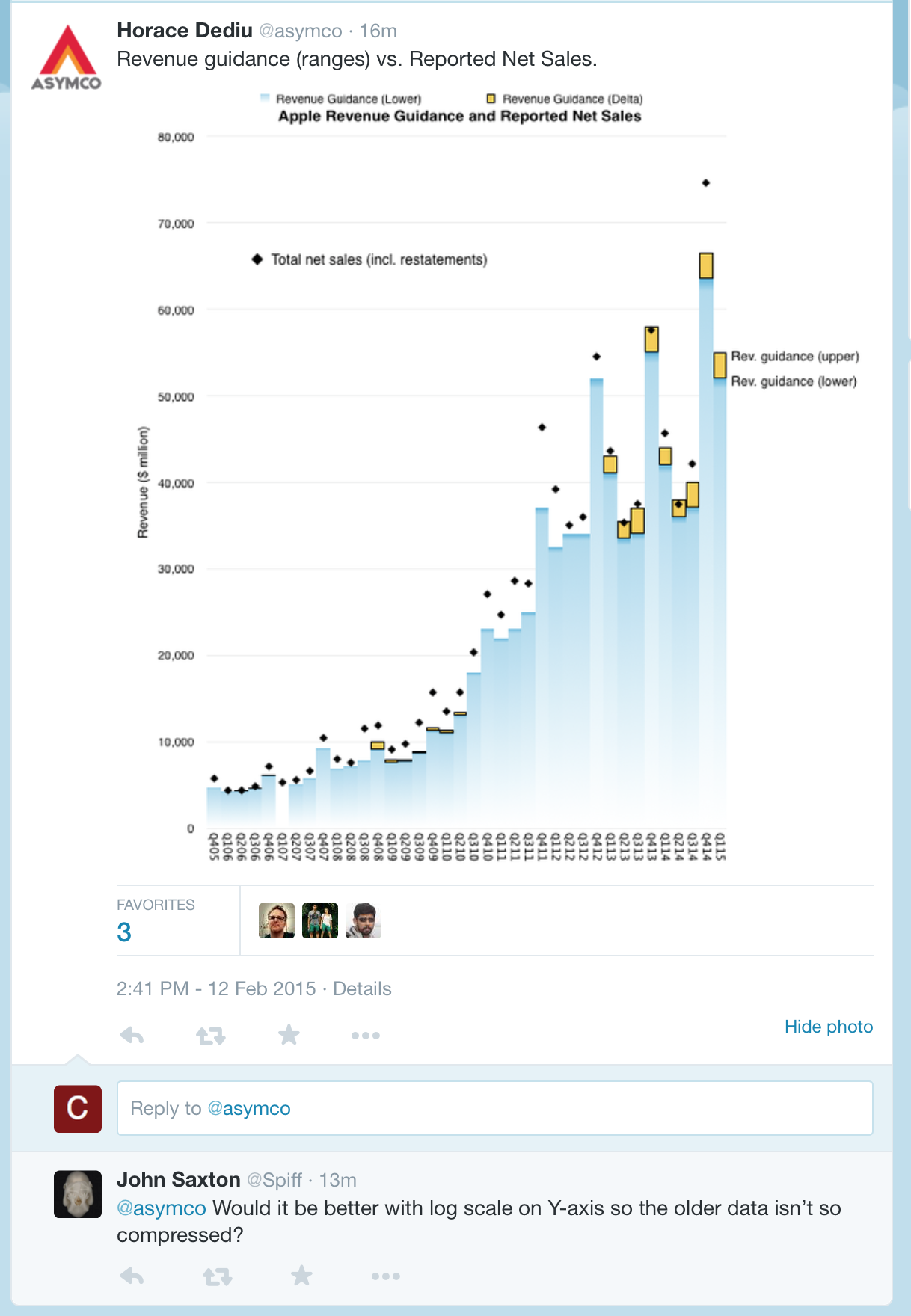

And here's my main Twitter feed. Notice how Twitter has taken the same conversation, shown it as four separate parts, which are out of order and incomplete. And Twitter put things together which don't go together (my reply to @Spiff is linked to @asymco's tweet). It's extremely confusing and terrible.

I've noticed other major problems with Twitter threading too. (Threading is how it puts together multiple tweets into one conversation. An example of an OK method is chronological order, like my first screenshot. Split into parts, out of order, with some messages omitted, is an example of a very bad method.)

No Context

Often I view a tweet which replies to something, but when I click through to details I cannot see what it replies to. Ugh... Twitter, please get your act together. (Or better yet, someone make a better website and replace Twitter. Thanks in advance.)

Here is an example. Ann Coulter replies to someone talking about a school censoring "this word", and suggests "raghead" instead. But what word was it? I don't know and there's no reasonable way to find out because Twitter's threading is broken: it doesn't show me the prior tweets in the conversation.

Elliot Temple

Proposal to Alter Graph Misleadingly

The graph @asymco tweeted is normal enough. Focus your attention on the reply from @Spiff.

The reason some of the revenue data looks compressed on the current revenue scale is because it is. Trying to change that is trying to mislead viewers.

What @Spiff proposes is an example of How To Lie With Statistics. He's intentionally trying to make the graph look different than it normally would to meet an agenda of his and give the viewer a different impression.

His tweet may seem innocuous but it's really bad. He's a bad scholar wannabe. Stuff like this is not OK. Bar graphs for typical quantities should use a linear scale, starting at 0, unless there's a damn good reason to do otherwise. If you do something else, the bars are no longer proportional to each other in the intuitive way, so it misleads viewers.

For example, suppose the graph started with revenue at $50,000,000 instead of 0. Then all the bars would be shorter. This would make the smaller bars shorter by a large percentage, and make them look even shorter compared to the big bars. That'd be really bad! When someone looked at it and thought "this bar is twice as big as this other bar", that would no longer be a valid way of reasoning due to not starting at 0.
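To put numbers on this, here's a small Python sketch with made-up revenue figures (hypothetical, not the data from @asymco's graph). It compares the ratio of two drawn bar heights when the axis starts at 0 versus at $50,000,000. The function name is just for illustration.

```python
def apparent_ratio(small: float, big: float, baseline: float = 0.0) -> float:
    """Ratio of drawn bar heights when the value axis starts at `baseline`.

    Each bar is drawn from the baseline up to its value, so a nonzero
    baseline subtracts the same amount from every bar.
    """
    return (small - baseline) / (big - baseline)

# Hypothetical quarterly revenues (illustrative numbers only).
small_quarter = 60_000_000
big_quarter = 120_000_000

true_ratio = small_quarter / big_quarter
linear_from_zero = apparent_ratio(small_quarter, big_quarter)
truncated = apparent_ratio(small_quarter, big_quarter, baseline=50_000_000)

print(f"true ratio:          {true_ratio:.3f}")
print(f"axis starts at 0:    {linear_from_zero:.3f}")
print(f"axis starts at $50M: {truncated:.3f}")
```

With the axis at 0, the drawn ratio matches the real one (0.5). Starting the axis at $50M makes the smaller bar look about a seventh the height of the bigger one, even though it's really half.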

Or suppose the graph used a log scale like @Spiff proposed. A log scale means the revenue axis would be labelled like $1, $10, $100, $1000, instead of like $0, $10 million, $20 million, $30 million, etc. See how misleading that could be? What it means is, again, when someone compares the sizes of the bars, he gets the wrong idea. When he thinks, "This bar is about 20% higher than the bar before it", he's being played for a fool.
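The same kind of sketch works for a log scale. It assumes a base-10 axis whose bottom is at $1, matching the labels above, and again uses made-up revenue figures. A bar's drawn height is then proportional to the logarithm of the value, not the value itself.

```python
import math

def log_bar_height(value: float, axis_min: float = 1.0) -> float:
    """Height of a bar on a base-10 log axis whose bottom is at `axis_min`.

    A log axis can't start at 0 (the log of 0 is undefined), so bars are
    drawn up from some positive minimum, assumed here to be $1.
    """
    return math.log10(value) - math.log10(axis_min)

# Hypothetical revenues: the second quarter is really 20% higher.
before = 100_000_000
after = 120_000_000

real_increase = after / before - 1
drawn_increase = log_bar_height(after) / log_bar_height(before) - 1

print(f"real increase:  {real_increase:.1%}")
print(f"drawn increase: {drawn_increase:.1%}")
```

A quarter with 20% more revenue gets a bar only about 1% taller. And where the axis bottom sits is itself an arbitrary choice that changes the ratios, which is one more way to tilt the picture.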

Don't play people for a fool. Don't try to trick them. Don't have an agenda for how you want your graph to look and then adjust things to achieve it. Just make a simple graph that makes sense and then leave it alone and let it speak for itself. Tinkering with your graph as @Spiff proposes is dishonest (or clueless and still harmful).

Scholarship, please.

Update: @asymco says:

Share prices are frequently graphed using log scales by default. I don't condone the practice.

I'm sad to hear how common bad scholarship is. That's terrible. But I'm glad @asymco understands this and does a better job. Thumbs up to him! Here's @asymco's blog which I read regularly.

Update 2: @Spiff now agrees with my point about log scales (I think).

Update 3: Here is an example of a very bad article advocating bad use of log charts. It looks mainstream and has a tone of sharing uncontroversial knowledge.

Elliot Temple

Reading Resources

Many people say they are too busy to learn much, or to learn well, and that they don't have time to read books.

These same people typically don't know how to speed read. Why not? If time is the issue, why not learn speed reading and then read more? Because they are lying and the real issue is they don't want to read or learn.

Regardless, I want to discuss some aspects of how speed reading works.

The most powerful form of speed reading is RSVP (rapid serial visual presentation). It's easier to see what it is than to explain – click here. You see 1 to 5 words at a time, and the computer quickly replaces them with the next few.

It's quite achievable to read twice as fast with RSVP as with regular book reading. So you can read a book in 3 hours instead of 6 hours. You don't have to read many books to pay back the time invested in learning to use RSVP. Actually the time investment is pretty near zero – the best way to learn RSVP is to start using it at speeds only a little faster than your normal reading (and I'd recommend 3-4 words appearing at a time to start with, not 1), and work your way up as you practice. Since you learn while reading, it doesn't cost you any time if you were going to read anyway. It starts saving a little time right away, and a lot more later.
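Here's a minimal sketch of what RSVP software does, assuming the simplest possible design: split the text into chunks of a few words and show each chunk for a fixed time derived from a target words-per-minute speed. The function names and default speed are made up for illustration and aren't taken from any particular RSVP program; real ones typically add extras like longer pauses at punctuation.

```python
import time

def rsvp_chunks(text: str, words_per_chunk: int = 3) -> list[str]:
    """Split text into consecutive groups of `words_per_chunk` words."""
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk])
            for i in range(0, len(words), words_per_chunk)]

def seconds_per_chunk(wpm: float, words_per_chunk: int) -> float:
    """How long each chunk stays on screen to average `wpm` words per minute."""
    return 60.0 * words_per_chunk / wpm

def rsvp_show(text: str, wpm: float = 300, words_per_chunk: int = 3) -> None:
    """Print chunks one at a time, overwriting the same terminal line."""
    chunks = rsvp_chunks(text, words_per_chunk)
    delay = seconds_per_chunk(wpm, words_per_chunk)
    width = max((len(c) for c in chunks), default=0)  # pad so old text is erased
    for chunk in chunks:
        print("\r" + chunk.ljust(width), end="", flush=True)
        time.sleep(delay)
    print()
```

For example, `rsvp_show(some_text, wpm=300, words_per_chunk=3)` shows each 3-word chunk for 0.6 seconds. Practicing the way described above just means nudging `wpm` up a little at a time as it gets comfortable.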

Reading costs resources other than time. For example, if you use RSVP to read twice as many books, and you buy your books from Amazon, you'll have to pay Amazon twice as much money. But books are cheap, so I hope you'll forgive this "problem" with RSVP.

Using RSVP software requires e-books. If you have a paper book, you can't read it with RSVP. Because of DRM, this is the biggest difficulty with RSVP. E-books like Kindle books are easy to get and exist for many, many books. And they are typically cheaper than paper books. The problem is that, in trying to prevent you from giving copies of the book to your friends (or strangers), e-book sellers also try to prevent you from reading the book in anything but their official software (which doesn't support RSVP). To read a book with RSVP, you need it without copy protection. So you have to read from a limited pool of free books, use torrents or book download websites, or use software to remove the DRM. This is not hard, but unfortunately you can't just get started reading any book from Kindle in 5 minutes. It might take you a couple hours to set up DRM removal software, or half an hour to find a book download website or get a torrent program.

Buying e-books and removing the DRM so you can read them in your own choice of software is completely reasonable and moral. If you don't distribute the books to others, you aren't doing anything wrong. You pay for the book, and then read your book, that you own, with the software of your choice. That's it. What's wrong with that? Nothing. It also offers the best selection of books available. People should learn how to do this. It isn't very hard.

Mental Energy

So with RSVP you read a book in 3 hours instead of 6 hours. Is that harder or easier, once you know how? Easier. It leaves you less drained, less fatigued, less mentally tired. But how much easier? Since you spend half as much time reading, does that mean it takes half as much mental energy? No. For every hour using RSVP, you get more mentally tired than one hour of regular reading.

With RSVP, you get less mentally tired per page read, but more tired per minute of reading.

A rough estimate is that while you're saving 50% for time, you're only saving 25% for mental energy.

Say regular reading finishes 100 words in 100 units of time and uses up 100 units of mental energy. Then RSVP would read 100 words in 50 units of time and use up 75 units of mental energy. This is a good thing.
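The "less tired per page, more tired per minute" claim can be checked directly with the rough numbers above. This is only a worked version of those estimates, not a measurement; the `Mode` type and helper names are made up for illustration.

```python
from typing import NamedTuple

class Mode(NamedTuple):
    """Rough estimates from the text, in arbitrary units."""
    words: float
    time: float
    energy: float

regular = Mode(words=100, time=100, energy=100)
rsvp = Mode(words=100, time=50, energy=75)

def energy_per_word(m: Mode) -> float:
    """How tiring each page is."""
    return m.energy / m.words

def energy_per_time(m: Mode) -> float:
    """How tiring each minute is."""
    return m.energy / m.time

def percent_saved(new: float, old: float) -> float:
    """Percentage reduction going from `old` to `new`."""
    return 100 * (1 - new / old)

print("energy per word:", energy_per_word(regular), "vs", energy_per_word(rsvp))
print("energy per time unit:", energy_per_time(regular), "vs", energy_per_time(rsvp))
print("time saved (%):", percent_saved(rsvp.time, regular.time))
print("energy saved (%):", percent_saved(rsvp.energy, regular.energy))
```

RSVP comes out at 0.75 energy per word versus 1.0 (less tiring per page) but 1.5 energy per time unit versus 1.0 (more tiring per minute), which is the 50% time saving and 25% energy saving described above.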

Busy People

Let's hypothetically suppose that RSVP used up 125 mental energy units instead of 75. Then you'd be saving time but spending more mental energy per word. Would that be good?

Certainly not for everyone. But for a lot of people, RSVP would still be very useful in that scenario. People who are low on time would still use RSVP to save time. They'd be effectively trading some mental energy for some time. They'd be converting one resource (mental energy) into another (time). If they are low on time, doing a conversion to save time is useful.

Most people, including most "busy" people, only use a fraction of their mental energy each day anyway. They've got a lot to spare. (Many will lie and say otherwise. The issue is not that they are too mentally fatigued to think more, it's really that they don't like thinking, so they say they are mentally fatigued to excuse their choice not to think much.)

But real RSVP saves both time and mental energy. That makes it such an amazing deal. It's ridiculous that so few people use it. This is a good example of how people make huge mistakes which make their quality of life dramatically worse.

The only real reason not to use RSVP is if you don't read. Which would be a big mistake, but for different reasons. Thinking and ideas are important! But I won't go into that here. If you want an explanation, you can read Philosophy: Who Needs It or ask at the Fallible Ideas Email Discussion Group.

Different Types of Mental Energy

There are different types of mental energy. If you get tired from reading and are too mentally fatigued to read more, you can usually still do something else, including listening to an audio book. But they aren't totally different: if you can normally comfortably read for 5 hours in a row, but first you get really tired from playing chess for 10 hours, you'll find you run out of reading mental energy faster. All that chess used up the majority of your reading energy. All sorts of different types of mental energy are linked significantly but not entirely: using one uses up a lot of the other, but running out of one often doesn't completely run you out of the other.

One consequence is that if you're using mental energy for other parts of your life, and you add in some reading, this is not a zero-sum game. Not every bit of mental energy that goes to reading means less mental energy for other tasks. Maybe two thirds of the mental energy for reading has to be subtracted from other tasks, but one third is a bonus.

Audio Books

Audio books make speed reading even more convenient than text. Lots of audio book software already has an option to turn up the speed. Unfortunately, a lot of software only lets you listen at 1.5x or 2x speed, even though a skilled listener can listen at 2.5x or 3x. (A lot of audio books are read very slowly, significantly slower than regular slow text reading.) Please be careful, because some software labels 1.5x speed as "2x" and 2x speed as "3x". This mislabelling includes the software from Apple and Amazon (Audible). You can easily test this by playing something at "2x" for 1 minute (using a clock) from the beginning, and then seeing how far along you are: 90 seconds or 2 minutes in.

Audio book selection is limited, but this can be fixed with text-to-speech software such as Voice Dream Reader. (I use the "Paul" voice.) Text-to-speech software now works extremely well. The only problem is if you buy a book on Kindle with DRM, you can't read it with your own choice of software, like we talked about before. That means no RSVP and also no text-to-speech. Unless you remove the DRM, which isn't that hard.
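The clock test for mislabelled speed settings comes down to a single division: how much of the recording you got through, divided by how much real time passed. A tiny sketch (the function name is made up for illustration):

```python
def actual_speed(recording_seconds_advanced: float, wall_clock_seconds: float) -> float:
    """Real playback multiplier: seconds of recording covered per real second."""
    return recording_seconds_advanced / wall_clock_seconds

# Played at a setting labelled "2x" for one real minute, starting from 0:00.
print(actual_speed(90, 60))   # position reads 1:30, so it's really 1.5x
print(actual_speed(120, 60))  # position reads 2:00, so it really is 2x
```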

Some people worry that text-to-speech software is harder to understand than human speech. This may have been true in the past, but it is not true today. Actually, text-to-speech is better and easier to understand at high speeds because it's 100% consistent about pronunciation and pacing. Not every single word is pronounced correctly, but most are, and they are always pronounced the same way, and you can still understand it (and if you're really bothered, you can tinker with it and fix how it pronounces words).

How do audio books compare to RSVP and regular reading? Using the same numbers as before, where regular was 100/100/100 and RSVP was 100/50/75, audio book speed reading would be 100 words in 75 time using 40 mental energy. (Audio books without turning up the speed would be more like 100 words in 150 time using 35 mental energy. There's really no good reason to do that. Once you're good at this, if you're tired you can just turn the speed down from 2.5x to 2x or something like that, and it feels very easy. There's no reason to use 1x speed.)
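Putting all four sets of rough estimates side by side shows the trade-offs: words per unit of time (speed) and mental energy per word (how tiring each page is). The numbers are the estimates given above, not measurements, and `summarize` is just an illustrative helper.

```python
# Rough estimates from the text: name -> (words, time units, mental energy units).
modes = {
    "regular reading": (100, 100, 100),
    "RSVP": (100, 50, 75),
    "sped-up audio book": (100, 75, 40),
    "1x audio book": (100, 150, 35),
}

def summarize(modes):
    """Return name -> (words per time unit, mental energy per word)."""
    return {name: (w / t, e / w) for name, (w, t, e) in modes.items()}

for name, (speed, energy_per_word) in summarize(modes).items():
    print(f"{name:20} {speed:.2f} words per time unit, "
          f"{energy_per_word:.2f} energy per word")
```

Sped-up audio is slower than RSVP (1.33 versus 2.00 words per time unit) but much less tiring per word (0.40 versus 0.75). Listening at 1x saves only a little more energy per word (0.35) while taking twice as long as sped-up audio, which is why there's no good reason to do it.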

Audio books are a great way of reading, even though they are slower than RSVP, because they are less mentally draining and still pretty fast. And it's basically free to learn to listen to audio books at higher speeds: just turn it up a little at a time and you'll learn while reading without any separate practice or training or lessons.

The other great thing about audio books is you can easily multitask. You can easily listen to an audio book while walking somewhere, while on public transit, while driving, while exercising, while showering (with speakers instead of headphones), or while eating. It works with some other activities too, such as playing video games, though that can require some skill if it's a hard game. By contrast, multitasking an audio book with exercise requires basically no skill; anyone can do it right away.

TV and Movies

TV and movies can be watched at higher speeds to save time. Again, you can learn this gradually while doing it, so it's basically a free skill that saves a huge amount of time for zero downside. The speed at which you can watch something depends on factors like how fast people talk and whether they have accents. But once you are good, you can watch most TV and movies at 2x to 2.5x speed while being completely comfortable and relaxed. And getting comfortable at 1.5x speed comes pretty quickly and is still fast enough to save a lot of time.

Boredom

Another advantage of speed reading, speed listening and speed watching is that they increase the amount of interesting stuff you engage with per minute. They make the book or show effectively have denser content, so it's more interesting: all the good parts are packed closer together. This is especially valuable if you need to read something for a school class but it's kind of boring. It makes stuff less boring and more interesting. It's also great if you're bored and have trouble finding enough interesting stuff, because it will change some books and shows from too boring to worthwhile.

Skimming

There are other types of speed reading, but I think most of them are inferior to RSVP and there's no reason to use them. Most other methods of speed reading are designed to work with paper books, but the fact is software is more powerful than paper books. And a speed reading method that utilizes software (RSVP) is superior to one that doesn't.

However, there is a notable form of reading, sort of a kind of speed reading, which is great. It's called skimming. If you don't need to read every word, don't! This can be a lot faster than even RSVP if you can skip over half the text. And there are plenty of reasons to take a look at something but not read all of it. That's really useful. You might want to see what something is and whether you're interested, but instead of just reading the beginning, you skip ahead. A great way to get a sense of a book is to go to a chapter that sounds interesting, read a few paragraphs, skip a few pages, read a few paragraphs, skip a few pages, etc. It's better to adjust what you skip, and when, depending on what you read, though. Don't just turn pages thoughtlessly.

RSVP turned up to a really high speed has some similarities to skimming. Both are really fast, and in both cases you miss stuff. What are the main differences? With very fast RSVP, you don't miss any sections of text. Say you're trying to find out if the author addresses a particular counter-argument. He might do that in one paragraph somewhere in the middle of the chapter. If you skim, you could easily miss that paragraph. If you use very high speed RSVP, you'll see every paragraph and you won't miss it. If you're reading too fast you might not understand it, but you'll see the topic and stop and then read that paragraph again.
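To make the coverage difference concrete, here's a sketch that counts which paragraphs you'd see with a deliberately mechanical skim (read 3, skip 9, repeat), which is cruder than the thoughtful skimming recommended above but makes the counting easy, versus very fast RSVP, which passes over every paragraph. The chapter length and target position are hypothetical.

```python
def skimmed_paragraphs(total: int, read: int, skip: int) -> set[int]:
    """Indexes of paragraphs seen when reading `read`, then skipping `skip`, repeatedly."""
    seen = set()
    for start in range(0, total, read + skip):
        seen.update(range(start, min(start + read, total)))
    return seen

def rsvp_paragraphs(total: int) -> set[int]:
    """Very fast RSVP still flashes every paragraph past your eyes."""
    return set(range(total))

total = 60   # hypothetical chapter length, in paragraphs
target = 30  # hypothetical paragraph where the counter-argument is addressed

skim = skimmed_paragraphs(total, read=3, skip=9)
rsvp = rsvp_paragraphs(total)
print(f"skimming sees {len(skim)} of {total}; target seen: {target in skim}")
print(f"fast RSVP sees {len(rsvp)} of {total}; target seen: {target in rsvp}")
```

The mechanical skim covers 15 of 60 paragraphs and misses the target; fast RSVP covers all 60, so you at least notice the topic and can go back and read that paragraph properly.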

If you ever want to say something like, "the author doesn't cover topic X" you need to read every sentence, even if very quickly so you don't understand every detail perfectly. But most of the time you don't need to say things like that, and it's no big deal if you miss something, so skimming is great.

I think both skimming and very fast RSVP are underrated. People try too hard to finish the whole book, or something like that. But with a lot of books, you can just quickly go through the best parts, look for parts of particular interest to you, etc., and miss a bunch, and you get more value for your time and effort than if you read the whole book.

Books don't have a totally consistent level of quality, and not all the material is equally interesting to you. For most books, the less good parts are not as worthwhile to read for you as just reading one of the better parts from another book (even if that second book is, overall, less good or less interesting to you). Since you aren't going to read all the interesting books – you won't run out – don't hesitate to go through a book quickly. If you can get 75% of the value from the book in 50% of the time, that's absolutely wonderful and you should be thrilled and move on to the next book and do the same thing with it too.

Elliot Temple
