Big Hero 6 movie comments. You should watch the movie before reading this.

SPOILERS

starts with main character (hiro) getting arrested for peaceful activities that should be legal. no one objects to this state of affairs, including hiro himself who thinks the illegal activities are good things to do.

within the first 10 minutes the movie is telling HUGE HUGE HUMONGOUS GIGANTIC SUPER BIG lies about what university is like, by presenting a completely fake school lab scene that’s waaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaay cooler than real ones. Also extremely expensive even after you tone it down from sci-fi to realism.

so then after the utopia-paradise convinces the main character to go to school, he wants to make something awesome to get in. so he ... pulls out a pencil, a pencil sharpener, and paper. umm wait what? this is a sci fi world with advanced robotics technology, this guy is super into technology ... but he doesn’t use an iPad like device or even a computer keyboard for writing, brainstorming, etc? he handwrites?? c’mon. wtf.

so then hiro makes awesome amazing future tech in a short time period, PRIOR TO attending school, in hopes of getting in. note his ability to make this indicates he very much does not need school, even the super awesome fake school.

so then he gets 2 choices: get into school, or sell out to a capitalist guy criticized for caring about “self-interest”. hiro turns down a fortune at age 14 without really thinking about it or finding out details of his options. which the professor mentor dude and his elder brother both treat as wise.

so after the professor and his brother die, why doesn’t he consider going with the capitalist option at that point?

chasing baymax scene is kinda ridiculous. the SLOW MOVING robot keeps being in sight a little ways away, but hiro is constantly running full speed and just missing him and not catching up.

no, low battery does not make robots act drunk.

Hiro lies to his parent figure when leaving the house to chase Baymax, and lies more when returning. no significant explanation is necessary. plenty of kids watching who understand the necessity of heavily lying to parents...

movie plays a lot on the theme of an outsider (the robot) who doesn’t understand cultural stuff that normal people take for granted. this lack of understanding is supposed to be humorous. one concrete example is when the robot doesn’t understand fist bumping.

the policeman completely ignores his report of major violence and danger. he treats robots like a fantasy story, even though this sci-fi world has significant robotics and Hiro is giving the police report with a very impressive robot standing next to him. that’s really bad. i think it’s a bit unrealistic. i don’t think police are quite that bad. at least for adults. maybe they are when a kid is doing the report. i don’t know. in any case, i think it comes off as identifiable to the children in the audience – the authorities in their lives (parents and teachers primarily) repeatedly won’t believe them, ignore reasonable requests, make them try to deal with stuff on their own. that theme is very realistic for kids.

so why doesn’t Baymax save any photographs or videos from the camera it uses for eyesight? that sure would have been convenient at the police station. also Hiro should have taken a picture or video with his smartphone or something.

the friend group in the movie are all very strong personality archetypes. this isn’t very realistic. most people are more mild, with a bit of some archetypes but also a lot of mild-mannered normalcy, compromises, etc. there’s general pressure on people not to be strong outliers. the strong extremes of the archetypes are a bit rare, but more entertaining and striking for movies.

after they go to the mansion and get upgrades, Hiro does a few grand in property damage while having Baymax show off his new rocket fist. no one takes notice of this.

when Hiro goes flying around on Baymax, he almost dies a few times. people don’t take brushes with death nearly seriously enough. they are too focused on the actual outcome instead of something more like the set of possible outcomes and their probabilities.

they also ignore the issue of acceleration forces acting on Hiro while he rides (and he’s frequently only attached to Baymax at like 2 points on his feet or knees which would put a ton of strain on those points). going high speed then changing direction very abruptly to go high speed another way requires a better setup or you like black out or die.

also they fly around the city for all to see, which is really stupid given their intent to fight someone using this technology. better if it’s a secret, keep the element of surprise.

now i figure they have enough evidence to get help from the cops or military. like they have Baymax’s medical scan of the badguy. Baymax has some data. and their car got trashed and they got chased through the streets, some stuff must have gotten on camera and had witnesses. but they don’t consider that at all, even though the micro-bot army is VERY VERY dangerous and serious and bringing in the military really is called for, and it’s extremely reckless and stupid for them to go after the guy themselves and also to do it without leaving full data and notes behind in case they die so the military at least has their info in order to fight if necessary.

the girls in the friend group are real thin.

for the fight after watching the teleporter video, they mostly fight in a sort of one-at-a-time way that is really convenient for showing what’s happening more easily i guess, but pretty damn lame if you think about it.

so Hiro himself, the protagonist a lot of the audience is meant to identify with, becomes kinda murderous pretty abruptly. that’s treated as just how even the best people are.

@Baymax tests and creation: so the first time it gets past saying Hi, on attempt 84 (a very low number, presented as a very high number), the medical scan works perfectly on the first try of that subsystem. that’s completely ridiculous.

so the capitalist dude doesn’t turn out to be the badguy. also he actually spends huge piles of government money.

the professor guy is pretty dumb. his daughter participated in the test voluntarily. now he wants to be a murderer. he also doesn’t seem to mind doing millions of dollars of property damage that hurts people other than his target, and he doesn’t seem to mind trying to kill Hiro and friends who he has no grudge against.

i don’t think the intended moral of the story is that irrational emotional family attachments are one of the more dangerous forces remaining internal to peaceful Western society. yet that’s kinda there.

Hiro and friends have massively higher tolerance for danger and brushes with death than most people. also they are wrong and it’s bad. and it doesn’t even occasion comment in the movie.

so remember how the acceleration was really unrealistic when flying earlier? it’s a lot worse now. when they are through the portal, Hiro climbs onto the pod thing the girl is in and hangs on to that while Baymax flies around pushing it. so now he doesn’t have the special attachment points between his suit and Baymax that kept him from falling off before. but he doesn’t fall off. cuz ... no reason. he barely even tries to hold on, sometimes letting go with his hands and just kinda crouching on it.

shooting the rocket fist to get them out is pretty stupid. cuz he could just shoot it directly away from them and that’d work too and then Baymax would be saved too. it’s not like there was a big hurry, they waste time saying bye. #physics

don’t they have backup copies of the robot’s memory cards, design schematics, etc, etc???

the news broadcast indicates the heroes don’t get credit. why? and how do they manage anonymity after all the public displays?

Elliot Temple


People Are More Interesting At The Margins

People are more interesting at the margins.

Elliot Temple


Two Firefight Errors

This post contains large spoilers for the book Firefight by Brandon Sanderson. He is my favorite fantasy author. In this post, I explain two errors I noticed in the book.

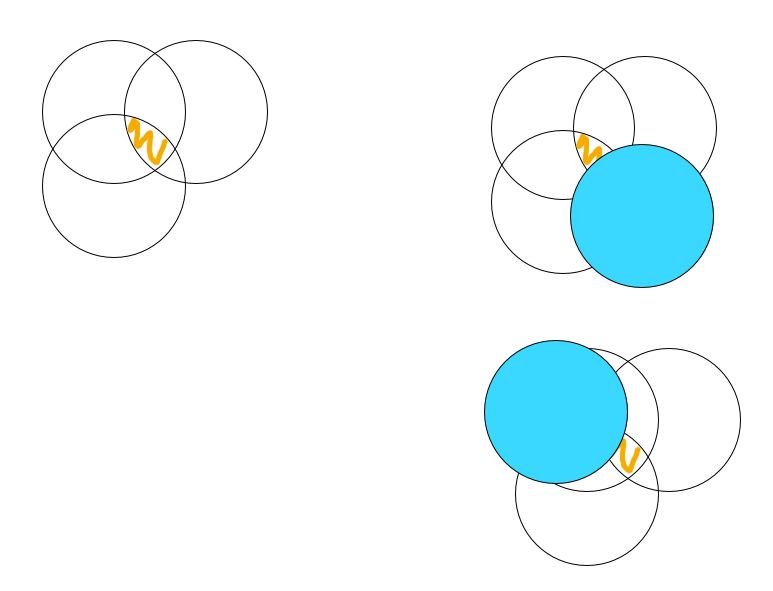

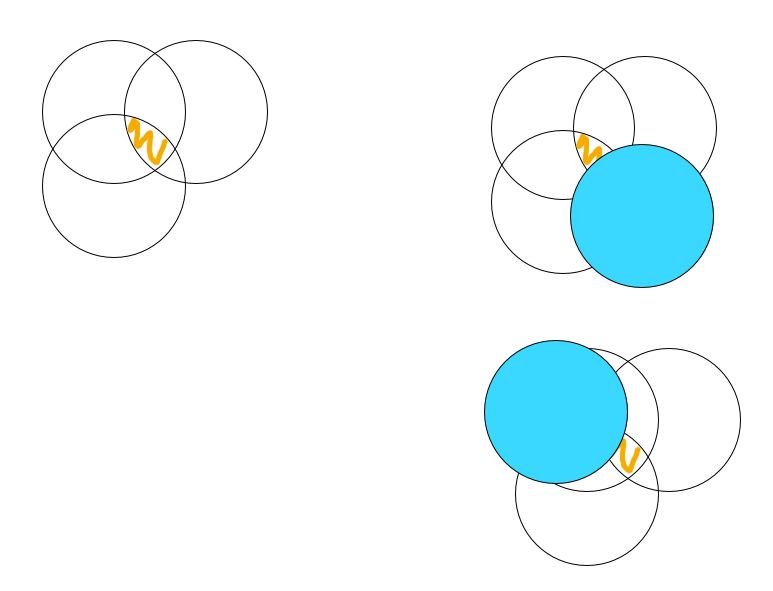

1) Is Regalia a High Epic?

> “Abigail isn’t a High Epic,” Tia said.
>
> “What?” Exel said. “Of course she is. I’ve never met an Epic as powerful as Regalia. She raised the water level of the entire city to flood it. She moved millions of tons of water, and holds it all here!”
>
> “I didn’t say she wasn’t powerful,” Tia said. “Only that she isn’t a High Epic—which is defined as an Epic whose powers prevent them from being killed in conventional ways.”

That made sense to me, but it's contradicted when David kills Regalia:

> “No!” My arms trembled. I shouted, then brought the blade down.
>
> And killed my second High Epic for the day.

The page with the ISBN number also calls Regalia a High Epic:

> Summary: “David and the Reckoners continue their fight against the Epics, humans with superhuman powers, except they may have met their match in Regalia, a High Epic who resides in Babylon Restored, the city formerly known as the borough of Manhattan”— Provided by publisher.

2) Geometry

The left diagram shows how they were trying to find Regalia’s base. That part makes sense. Every time she appears, you know her base is within some radius of that point, so you draw a circle. The overlap of all circles is where her base could be. (You can also have circles for places she didn’t appear, which then rule out that circle.)

To narrow it down further, you need any circle which overlaps part of the remaining possible city area, rather than none or all of it. As you can see in the right-hand diagrams, this new circle could be near an existing circle, or off in a new area. Both work. But the book says:

> From what I eventually worked out, my points had helped a lot, but we needed more data from the southeastern side of the city before we could really determine Regalia’s center base.

That doesn’t make sense. What matters is where a new data point's circle falls: it has to overlap part, but not all, of the remaining area where the base could be. That can be done from any direction.
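The narrowing argument can be checked with a rough sketch. The Python below is only an illustration under made-up assumptions: the sighting coordinates, the radius of 5, the grid, and the names `feasible` and `narrows` are all hypothetical and not from the book. It approximates the area where the base could be with grid points, then tests whether a new circle keeps some but not all of them.

```python
# Hypothetical sketch: every number and name here is an assumption for illustration.

def within(point, center, radius):
    """True if point lies inside (or on) the circle around center."""
    dx = point[0] - center[0]
    dy = point[1] - center[1]
    return dx * dx + dy * dy <= radius * radius

def feasible(sightings, radius, grid):
    """Grid points that are inside every sighting circle."""
    return {p for p in grid if all(within(p, c, radius) for c in sightings)}

def narrows(sightings, new_sighting, radius, grid):
    """True if the new circle overlaps part, but not all, of the feasible area."""
    before = feasible(sightings, radius, grid)
    after = feasible(sightings + [new_sighting], radius, grid)
    return 0 < len(after) < len(before)

grid = [(x, y) for x in range(-10, 11) for y in range(-10, 11)]
sightings = [(-2, 0), (2, 0)]   # assumed earlier appearances
radius = 5                      # assumed range limit

for name, point in [("southeast", (3, -3)), ("northwest", (-3, 3)),
                    ("center", (0, 0)), ("far away", (10, 10))]:
    print(name, narrows(sightings, point, radius, grid))
```

In this toy setup the southeast and northwest points both narrow the area, while the point in the middle (its circle covers all of the remaining area) and the far away point (its circle covers none of it) do not. Direction is not what decides it.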

Elliot Temple


Mindless or Perfect?

https://news.ycombinator.com/item?id=8859974

> They're not wrong in that people often rationalize their 'instinctual' choices, but to imply that nobody is cognizant of any of their thoughts or biases and that we're all slaves to our lizard brains is a bit of a stretch.

This is a massive false dichotomy.

On the one hand is the view that people are biased in ways they aren't aware of, and aren't in control of their lives, and therefore are slaves to their genes.

On the other hand is the view that, since people aren't animal slaves, people aren't super biased, super unaware of their biases, and so on.

I think the truth is pretty straightforward here: people are hugely biased, unaware of it, and bad at controlling their lives. But it doesn't mean they are slaves. They could do something about it. But evading and dismissing the issue, or accepting that's how people are, won't fix it.

Control over your life is possible but not automatic. It shouldn't be treated as either both possible and automatic, or neither. That's the false dichotomy again.

What will fix this problem is philosophy. Read Ayn Rand and Karl Popper, among others. Or come discuss this matter at the Fallible Ideas Discussion Group. Learn better ideas and integrate them into your life so you actually live by them.

The problem of bias (and more generally mistakes) is real, but also solvable. You don't have to choose from a false dichotomy of denying the problem exists (or downplaying it heavily) or else accepting the problem as a negative feature of human life. You can recognize the problem exists and then take steps to deal with it. A lot is actually known about how to handle this, but most people don't bother learning it.

Elliot Temple


Lying About Poker

http://www.europokerpro.com/games/fixed-limit-holdem-rooms/

> 5: When you start steaming give up for the day and save your bankroll.

this is super bad advice if you’re trying to make money (rather than looking at it as paying money for fun). which i think is most of the audience (some of the other tips look clearly oriented to people trying to make money, e.g. 2 and 4).

if you’re trying to make money at poker, you cannot be a player who steams. if you’re a steamer, you should expect to be a losing player.

the article tip implies it’s OK to be a steamer and try to make money at poker. this is a dirty and nasty lie, encouraging losing players to lose more money (in the disguise of tips to help them win money).

> The edges are really small in all the fixed limit games. For an example you can rarely expect to earn more than 5Big bets/100 hands on average even in the good games

and this is lying about what people can reasonably expect to win. 5 big bets per 100 hands is not “really small”. it sounds pretty great.

http://www.thepokerbank.com/strategy/other/winrate/

> • 1 – 4 bb/100 = Great. A solid winrate if you can sustain it.
>
> • 5 – 9 bb/100 = Amazing. This is a very high winrate at any level. Consider moving up.
>
> • 10+ bb/100 = Immense. Very, very few have a winrate like this. You probably have a small sample size though.

i don’t know fixed limit holdem that well, but a big bet in it (they were talking 5 big bets/100 hands) is (always?) double the big blind. so they might have just called 10 big blinds/100 a “really small” edge!?!?
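Here is the unit conversion as a small Python sketch. It assumes a big bet is exactly 2 big blinds, which is the usual fixed limit structure but is an assumption here, and it applies the tiers quoted above to the converted number. The names `big_bets_to_big_blinds` and `tier` are made up for illustration, and the cutoffs between tiers (e.g. how to label 4.5) are my guess at how to read the quoted ranges.

```python
# Assumption: in fixed limit holdem, 1 big bet = 2 big blinds.
BIG_BLINDS_PER_BIG_BET = 2

def big_bets_to_big_blinds(big_bets_per_100):
    """Convert a winrate in big bets/100 hands to big blinds/100 hands."""
    return big_bets_per_100 * BIG_BLINDS_PER_BIG_BET

def tier(big_blinds_per_100):
    """Label a winrate using the quoted tiers (cutoffs between ranges assumed)."""
    if big_blinds_per_100 >= 10:
        return "Immense"
    if big_blinds_per_100 >= 5:
        return "Amazing"
    if big_blinds_per_100 >= 1:
        return "Great"
    return "below the quoted tiers"

claimed = big_bets_to_big_blinds(5)   # the article's "really small" edge
print(claimed, tier(claimed))
```

Under those assumptions, 5 big bets/100 comes out as 10 big blinds/100, which lands in the top quoted tier.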

let’s check more info by searching fixed limit info in particular:

http://forumserver.twoplustwo.com/17/small-stakes-limit/current-expected-ceiling-earning-flh-hu-shorthanded-1486606/

> I think 3.5 big bets per 100 hands (after rake, but before rakeback and bonuses?) is not even close to attainable online right now. I'm not sure what is, but more HU and shorthanded targeting weaker players would obviously boost your winrate. But the best I seem to hear of is around 1.5 BB/100 if avg players at table was like 4 or something. I'm not even 100% on those figures bc it's all pretty much anecdotal.

and

> People that won 3 BB/100 were massive bumhunters and/or constantly buttoning people which is basically cheating. They were not playing regularly and winning 3 BB/100.

http://www.pokerstrategy.com/forum/thread.php?threadid=37634

> In Fixed Limit Holdem we know that good player will have average income 1-2 BB (Big Bets) per 100 hands.

so the article was super fucking lying about 5 big bets per 100 hands being “really small”. completely and utterly lying to people about what sort of income poker might offer. to lure in suckers, i guess.

btw who are the suckers who are fooled? they must be dumb. i don't play poker. maybe it'd help if they were better philosophers. i catch lies like this because i have good thinking methods.

Elliot Temple


Understanding Objectivism Comments

Comments on excerpts from Understanding Objectivism by Leonard Peikoff.

> Q: Ayn Rand once said that the attribute that most distinguished her was not intelligence but honesty. Could she have been referring to a concept that subsumes the virtue of honesty, and the lack of any innocent dishonesty such as rationalism?
>
> A: “Innocent dishonesty” strikes me as a self-contradiction. If a person is dishonest, then he’s guilty; if he’s innocent, he tried his best, then he was honest. So you probably mean the correct method—she was not only honest, but had the correct method of thinking. And was that simply the result of honesty on her part? I have to respectfully disagree with Miss Rand’s self-assessment. I never agreed with that, and I argued with her for decades on that point. I regarded myself as thoroughly honest, and I never came anywhere near coming up with her philosophy on my own. I think that to explain the origination of an actual new philosophy, honesty is a valuable and necessary condition, but does not go the whole way. You cannot get away from the fact that you have to be a genius on top of being honest.

Peikoff regards himself as 100% honest and uses that as a premise to reach conclusions about reality (including ones contrary to Ayn Rand). This is an arrogant and ridiculous method. You should not assume your own greatness as the starting point of your arguments, and see what the world is like only on that assumption.

The specifics here are terrible too. Who is 100% "thoroughly honest"? No one. No one is literally perfect on honesty. Which is great – it means there is room for improvement, there are opportunities to be better (for those who look for them).

Rather than emphasizing self-improvement maximally, Peikoff starts attributing success to vague, inborn(?), magical "genius".

> Let me take a different situation. In college, should you let the professor in the class know you disagree every time you do? Is your silence a sanction of evil? If it is, I certainly sanctioned a tremendous amount of evil in my fourteen years as a college student, so I have to come out as the world’s greatest monster, next to Kant. This was my policy: Sometimes I spoke up, and sometimes I didn’t, according to a whole constellation of factors. I realized that I couldn’t speak up every time, because I disagreed with everything. And since it was philosophy, every disagreement was vital, so I would have had to have equal time with the professor and the rest of the class, which would be ludicrous. So I simply had to accept from the outset, “An awful lot is going to go by that is irrational, horrendous, depraved, and I will be completely silent about it. I have no choice about that.”

This has the same kind of issue as the previous quote. Here Peikoff's reasoning starts with the premise that he's pretty good, and doesn't consider that he may be making a big mistake. Rather than argue the substance of the issue, he just kinda treats any criticism of himself as an absurdity to reject.

Peikoff's argument here is careless too. If he sanctioned a lot of evil, it wouldn't make him the second worst person, after Kant. Lots of people have done that, and Kant (and others) did a lot of other stuff wrong too.

As I read this, Peikoff basically confesses to huge sins and flaws, makes a half-hearted defense, and then says it can't be that bad because then life would be too hard and too demanding, and surely morality can't demand anyone be more than Peikoff is.

There's so much wrong here. Peikoff did not have to go to college. He did sanction college by going and then keeping mostly quiet and going along with it. You know Roark got expelled from school? And Roark says he should have quit earlier. Peikoff acts completely unlike Roark, and makes himself fit in enough to pass, but can't conceptualize that he may have done something wrong. Isn't that weird? He isn't taking Roark or Rand seriously. Rand is pretty clear about the moral sanction issue, and Peikoff's attitude is that a particular version of refusing to sanction evil (getting equal speaking time to his professors) is "ludicrous" and anyway he can't be so bad, so let's just start compromising principles.

> Now and then I would speak up; much of the time I kept silent. And I do not regard that as a sanction of evil, and I could certainly not have survived fourteen years of university if every moment had to be total war against every utterance.

Why go to fourteen years of evil university? There are alternatives. He did sanction it. He describes himself sanctioning it and his only defenses consist of thinking there were no better alternatives (while hardly considering any) and thinking he can't be too bad.

> For instance, once in a very early phase I tried to be a thorough intellectualist—that is, I was going to function exclusively cerebrally, without the aid of emotions (I was young at the time). And I remember very clearly that I went to a movie with the idea of having absolutely no emotions—that is, they would have nothing to do with my assessment of the movie and were just going to be pushed aside. I was going to try to judge purely intellectually as the movie went by. And I had a checklist in advance, certain criteria: I was going to judge the plot, the theme, the characters, the acting, the direction, the scenery, and so on. And my idea was to formulate to myself in words for each point where I thought something was relevant, how it stood on all the points on my checklist. And to my amazement, I was absolutely unable to follow the movie; I did not know what was going on. I needed to sit through it I can’t remember how many times, and I discovered that what you have to do is simply react, let it happen, feel, immerse yourself in it. And what happens is that your emotions give you an automatic sum. You just simply attend to it with no checklist, no intellectualizing, no thought, just watch the movie, like a person.

Peikoff used a bad intellectual method of movie watching, it didn't work, and then he just gives up completely instead of trying other intellectual methods. That's dumb. It's perfectly possible to watch movies a lot better than passively, and to think during them, while still following the plot. His checklist approach sounds pretty bad, but it's not that or nothing. That's a false dichotomy.

> Q: What were Ayn Rand’s reasons for not wanting to be a mother?
>
> A: Primarily I would say because she was committed from a very early age to a full-time career as a novelist and writer. She did not want to divert any of her attention to anything else. She wanted to pursue that full-time, and it was simply not worth it to her to divert any time from that goal, by her particular hierarchy of interests and values. Beyond that, she had no interest in teaching. She was very different, for instance, from me in that regard. She was not interested in taking someone and bringing them along step by step, which is essential to being a parent. She wanted a formed mind that she could talk to on the level as an equal. She had more of the scientific motivation, rather than the pedagogical motivation. So it was as simple as that.

I thought this was notable. For one thing, Rand did a lot of teaching, including with Peikoff personally. As Peikoff describes it (in various places), Rand helped him along a great deal, incrementally, over years and years. I think Rand couldn't find enough equals or peers; I think it's interesting Peikoff reports she wanted that.

"So it was as simple as that" is a bad comment to end with. It doesn't add anything. And it's wrong – the issues aren't simple. Maybe Peikoff hasn't noticed there's lots of interesting stuff here?

> Sometimes you have to expect to be momentarily overcome with the sheer force of the evil in a given situation. I want to speak for myself, from this point on, from my own experience, because I’m not prepared to make a universal law out of this, so I offer it to you for what it’s worth. There are times and situations where, despite my knowledge of philosophy, I feel overwhelmed by the evil in the world—I feel isolated, alienated, lonely, bitter, malevolent—and this is, to me, inescapable at times in certain contexts. I’ll give you an example. A few weeks ago, I went to a debate at a large university, on the subject of the nuclear freeze. One of the debaters, my friend, was eloquent, but it was a hopeless situation. The audience of college students was closed, irrational, hostile, dishonest by every criterion outlined tonight. They wouldn’t listen for a moment, they were rude—they were real modern hooligans—and when they did speak up, it was utterly without redeeming features—a whole array of out-of-context questions, sarcasm, disintegrated concretes—it was a real modern spectacle in the worst sense of the term. After a couple of hours of this, I was angry, I was resentful, I was hostile. And I felt (and I underscore the word “felt”), “This is the way the world is. What is the point of fighting it? They don’t want to know. I’m going to retire and stop lecturing and let the whole thing blow up, and to hell with it.” I was depressed. And of course, once I was in that mood, I was more negative about everything, so when I saw the headlines in the Times the next day, I felt worse. Even the long lines at the bank were further evidence that the world is rotten.
>
> The point here is that I don’t think that I made a mistake. I think you have to react to concretes.

How is this not a mistake!? Again I read it as Peikoff describing another big mistake he made. But then he doesn't see himself as having done anything wrong. Why? He tells us about why this isn't good, it's clear enough why it'd be better not to handle it this way, and yet somehow this non-ideal isn't a mistake for some unstated reason.

Elliot Temple


Leonard Peikoff Betrays Israel

From Understanding Objectivism, the book version, and this is from a student talking:

Now about Israel, there's a really big problem here. The issue is, the Israel example shouldn't be controversial. By acting as if it's controversial, he is deferring to anti-semites. He's treating their disagreement as a legitimate controversy, and sanctioning it. Instead of Roark's "don't think of them" type approach, he's treating evil as this important thing and saying to change your actions according to the demands of evil. You have to choose different examples because some anti-semites will get offended by the Israel example, you have to think of them and give their anti-semitic concerns due consideration.

He's letting anti-semites drag the conversation into a distorted reality where Israel's right to exist is a controversy. That's granting them way too much. There are some issues where you can say it's controversial and respect the other side, but there are other issues where you must not grant them the sanction of being legitimate opponents whose dissent constitutes an intellectual controversy. Anti-semitism hasn't made the issue of Israel's self-defense intellectually or rationally controversial.

Peikoff went out of his way to try to stop a student from acting according to reality – a reality in which Israel's self-defense is a clear cut example similar to the World War II example. Peikoff demanded the student show greater respect to anti-semites, and help them fake reality by pretending there is an intellectual controversy where there isn't one. This is a betrayal of Israel.

PS In fairness, I want to add that I mostly like the book so far, I think it's pretty good, you could learn some things from it.

Now consider three examples, a couple of them historical. One, Adolf Hitler announces that he’s going to take over as much of the world as possible. And in each instance, when his armies march into another country, he is ready with a pretext: that he is only acting in defense of the German people. The second example would be, in 1967, the State of Israel, anticipating, by means of military intelligence, that they were about to be attacked on all sides, attacked first and blew up the opposing air forces, and claimed that they did that in self-defense.Never mind what he's talking about, it doesn't matter to my point. Just note the Israel example. Then when Leonard Peikoff is commenting, I'll show you this out of order. Second he says:

This was certainly not a rationalistic presentation. He started with some examples—leaving aside the Israel one, which was inappropriate for the reasons we just mentioned—but Hitler is certainly a good example on the topic of force;OK, now what are the reasons Peikoff says not to use the Israel example?

So the rule here is, do not pick controversial concretes. Pick a nice range of concretes. But when you’re trying to understand, the examples should be simple and straightforward. Then, when you grasp it, you can take up trickier cases. So it is a bad idea to combine concretizing with devil’s advocacy. I learned this teaching elementary logic, and I thought I’d get two birds for the price of one, and in illustrating a certain fallacy, which is a simple fallacy to understand, I threw in an argument for political isolationism, and the example aroused the class, and it became so controversial that they began to challenge the logical point involved, because they disagreed with the political point. And I lost the entire logical issue on the example. I learned from that that examples cannot be controversial; they have to be illuminating.

First, an aside, Peikoff is wrong here. He should have learned the lesson that his class had this big misconception, this big hole in their rationality. When they "began to challenge the logical point involved, because they disagreed with the political point", that was a huge thinking and methodology mistake the students were making. Which Peikoff correctly recognized. But then instead of teaching them how to think better, he thought in the future he should avoid this issue coming up. Instead of teaching the students to be good at this, his plan is to avoid this thing where their flaws come out. :(

Now about Israel, there's a really big problem here. The issue is, the Israel example shouldn't be controversial. By acting as if it's controversial, he is deferring to anti-semites. He's treating their disagreement as a legitimate controversy, and sanctioning it. Instead of Roark's "don't think of them" type approach, he's treating evil as this important thing and saying to change your actions according to the demands of evil. You have to choose different examples because some anti-semites will get offended by the Israel example, you have to think of them and give their anti-semitic concerns due consideration.

He's letting anti-semites drag the conversation into a distorted reality where Israel's right to exist is a controversy. That's granting them way too much. There are some issues where you can say it's controversial and respect the other side, but there are other issues where you must not grant them the sanction of being legitimate opponents whose dissent constitutes an intellectual controversy. Anti-semitism hasn't made the issue of Israel's self-defense intellectually or rationally controversial.

Peikoff went out of his way to try to stop a student from acting according to reality – a reality in which Israel's self-defense is a clear cut example similar to the World War II example. Peikoff demanded the student show greater respect to anti-semites, and help them fake reality by pretending there is an intellectual controversy where there isn't one. This is a betrayal of Israel.

PS In fairness, I want to add that I mostly like the book so far, I think it's pretty good, you could learn some things from it.

Elliot Temple


Aubrey de Grey Discussion, 24

I discussed epistemology and cryonics with Aubrey de Grey via email. Click here to find the rest of the discussion. Yellow quotes are from Aubrey de Grey, with permission. Bluegreen is me, red is other.

If you want to stop talking, or adjust the terms of the conversation (e.g. change the one message at a time back and forth style), please say so directly because silence is ambiguous.

Did you receive my email?

Hi - yes, I got it, but I couldn’t think of anything useful to say.

But see my comments below. I don't think we're at an impasse. I think what you said here was particularly productive.

We have reached an impasse in which you insist on objecting to my failure to address various of your points, but I object to your failure to address my main point, namely that there is no objective measure of the rebuttability of a position. I am grateful for your persistence, since it has certainly helped me to gain a better understanding of the rationality IN MY OWN TERMS, i.e. the internal consistency, of my position, and in retrospect it is only because of my prior lack of that understanding that I didn’t zero in sooner on that main point as the key issue. But still it is the main point. I don’t see why I should take the time to read things to convince me of something that I’m already conceding for sake of argument, i.e. that Aubreyism is epistemologically inferior to Elliotism. And I also don’t see why I should take the time to work harder to convince you of the value of cryonics when you haven’t given me any reason to believe that your objections (i.e. your claim that Alcor’s arguments are rebuttable in a sense that my arguments for SENS are not, and moreover that that sense is the correct one) are objective.

When I say something you think is nonsense, if you ignore that and try to continue the rest of the conversation, we're going to run into problems. I meant what I said, and it's important to my position, so please treat it seriously. By ignoring those statements, it ends up being mysterious to me why you disagree, because you aren't telling me your biggest objections! They won't go away by themselves because they are what I think, not random accidents.

Also, for a lot of the things I haven’t replied to it’s because I’m bemused by your wording. To take the latest case: when I’ve asked you for examples where science could have gone a lot faster by using CR rather than whatever else was used, and you have cited cases that I think are far more parsimoniously explained by sociological considerations, you’ve now come back with the suggestion that "Lots of the sociological considerations are explained by the philosophical issues I'm talking about". To me that’s not just a questionable or wrong statement, it’s a nonsensical one. My point has nothing whatever to do with the explanations for the sociological considerations - it is merely that if you accept that other issues than the CR/non-CR question (such as the weight that rationalists give to the views of irrationalists, because they want to sleep with them or whatever) slow things down, you can’t argue that the CR/non-CR question slowed them down.

In this case, there was a misunderstanding. You took "explained" to mean, "make [a situation] clear to someone by describing it in more detail". But I meant, "be the cause of". (Both of those are excerpts from a dictionary.) I consider bad epistemology the cause of the sociological problems, and CR the solution. I wasn't talking about giving abstract explanations with no purpose in reality. I'm saying philosophy is the key issue behind this sociological stuff.

I regard this sort of passing over large disagreements as a methodological error, which must affect your discussions with many people on all topics. And it's just the sort of topic CR offers better ideas about. And I think the outcome here – a misunderstanding that wouldn't have been cleared up if I didn't follow up – is pretty typical. And misunderstandings do happen all the time, whether they are getting noticed and cleared up or not.

I think the sex issue is a great example. Let's focus on that as a representative example of many sociological issues. You think CR has nothing to do with this, but I'll explain how CR has everything to do with it. It's a matter of ideas, and CR is a method of dealing with ideas (an epistemology), and such a method (an epistemology) is necessary to life, and having a better one makes all the difference.

Chart:

Epistemology -> life ideas -> behavior/choices

What does each of those mean?

1) Epistemology is the name of one's **method of dealing with ideas**. That includes evaluating ideas, deciding which ideas to accept, finding and fixing problems with ideas, integrating ideas into one's life so they are actually used (or not), and so on. This is not what you're used to from most explicit epistemologies, but it's the proper meaning, and it's what CR offers.

2) Life ideas determine one's behavior in regard to sex, and everything else. This is stuff like one's values, one's emotional makeup, one's personality, one's goals, one's preferences, and so on. In this case, we're dealing with the person's ideas about sex, courtship and integrity.

3) Behavior/choices is what you think it means. I don't have to explain this part. In this case it deals with the concrete actions taken to pursue the irrational woman.

You see the sex example as separate from epistemology. I see them as linked, one step removed. Epistemology is one's method of dealing with (life) ideas. Then some of those (life) ideas determine sexual behavior/choices.

Concretizing, let's examine some typical details. The guy thinks that sex is a very high value, especially if the woman is very pretty and has high social status. He values the sex independent of having a moral and intellectual connection with her. He's also too passive, puts her on a pedestal, and thinks he'll do better by avoiding conflict. He also thinks he can compromise reason in his social life and keep that separate from his scientific life. (Life) ideas like these cause his sexual behavior/choices. If he had different life ideas, he'd change his behavior/choices.

Where did all these bad (life) ideas come from? Mainly from his culture including parents, teachers, friends, TV, books and websites.

Now here's where CR comes in. Why did he accept these bad ideas, instead of finding or creating some better ideas? That's because of his epistemology – his method of dealing with ideas. His epistemology let him down. It's the underlying cause of the cause of the mistaken sexual behavior/choices. A better epistemology (CR) would have given him the methods to acquire and live by better life ideas, resulting in better behavior/choices.

Concretizing with typical examples: his epistemology may have told him that checking those life ideas for errors was unnecessary because everyone knows they're how life works. Or it told him an ineffective method of checking the ideas for errors. Or it told him the errors he sees don't matter outside of trying to be clever in certain intellectual discussions. Or it told him the errors he sees can be outweighed without addressing them. Or it told him that life is full of compromise, win/lose outcomes are how reason works, and so losing in some ways isn't an error and nothing should be done about it.

If he'd used CR instead, he would have had a method that is effective at finding and dealing with errors, so he'd end up with much better life ideas. Most other epistemologies serve to intellectually disarm their victims and make it harder to resist bad life ideas (as in each of the examples in the previous paragraph). Which leads to the sociological problems that hinder science.

Everyone has an epistemology – a method of dealing with ideas. People deal with ideas on a daily basis. But most people don't know what their epistemology is, and don't use an epistemology that can be found in a book (even if they say they do).

The epistemologies in books, and taught at universities, are mostly floating abstractions, disconnected from reality. People learn them as words to say in certain conversations, but never manage to use them in daily life. CR is not like that, it offers something better.

Most people end up using a muddled epistemology, fairly accidentally, without much control over it. It's full of flaws because one's culture (especially one's parents) has bad ideas about epistemology – about the methods of dealing with ideas. And one is fallible and introduces a bunch of his own errors.

The only defense against error is error-correction – which requires good error-correcting methods of dealing with ideas (epistemology) – which is what CR is about. It's crucial to learn about what one's epistemology is, and improve it. Or else one will – lacking the methods to do better – accept bad ideas on all topics and have huge problems throughout life.

And note those problems in life include problems one isn't aware of. Thinking your life is going well doesn't mean much. The guy with the bad approach to sex typically won't regard that as a huge problem in his life, he'll see it a different way. Or if he regards it as problematic, he may be completely on the wrong track about the solution, e.g. thinking he needs to make more compromises with his integrity so he can have more success with women.

PS This is somewhat simplified. Epistemology has some direct impact, not just indirect. And I don't regard the sociological problems as the only main issue with science, I think bad ideas about how to do science (e.g. induction) matter too. But I think it's a good starting place for understanding my perspective. Philosophy dominates EVERYTHING.

Continue reading the next part of the discussion.

Elliot Temple
