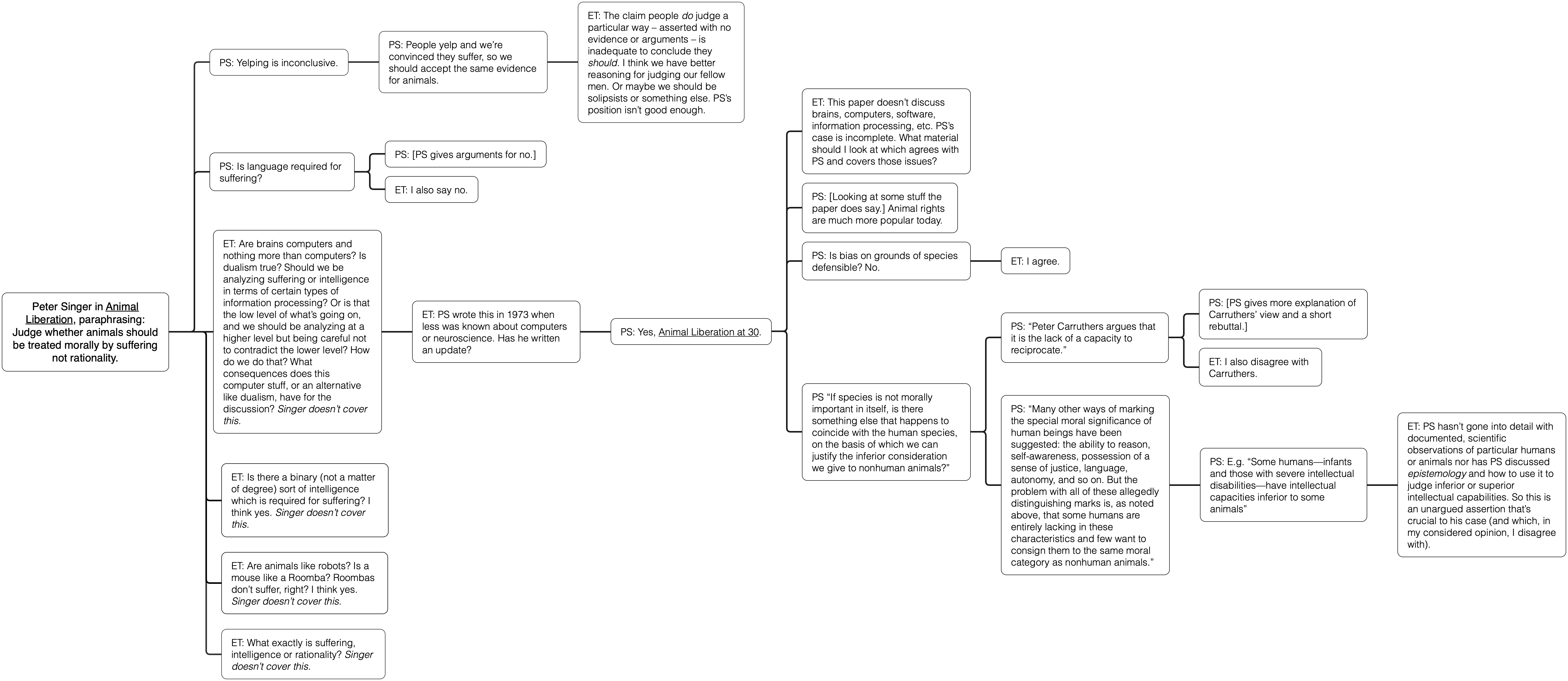

I began organizing the current state of the animal rights debate into a discussion tree diagram. I started with Peter Singer because he's a well known intellectual with pro animal rights writing. I will update the diagram or create additional diagrams if some pro animal rights people point me to literature which addresses my unanswered arguments and questions, or make important arguments in the comments below. I hope they'll do that. I prefer pointers to specific parts of literature unless someone wants to first concede that key arguments for animal rights haven't been written down anywhere (and explain why they haven't been).

The diagram is just an outline. For details about a particular part, ask in comments below. The diagram is meant to show (a piece of) the structure of the debate/discussion. It selectively focuses on points Singer raised and points I consider important. I'm sure other people have written relevant things, but I don't know where to find them: I've done some Bing searches unsuccessfully, and I have other research priorities (such as how to have a rational discussion – this is an experiment for that purpose). My relevant expertise is primarily about epistemology, software and science, not the animal rights literature. I've debated ~25 people on these issues but they typically bring up sources like a YouTube video about how an animal did something that seems intelligent to them.

Click the diagram to expand or view the PDF for selectable text. The source links are clickable in the PDF and are Animal Liberation and Animal Liberation at 30.

Update: I explain more of my position, and do some research, in the comments below, and in Discussion about Animal Rights and Popper

Update 2: I wrote Animal Rights Issues Regarding Software and AGI

Messages (129)

You actually believe that a vegetable is somehow smarter than a Dolphin?

#14340 can you quote what makes you think that Elliot believes a vegetable is smarter than a dolphin?

#14348 Lad, look at the last 2 boxes.

#14348 https://imgur.com/a/eOgLNVM

Doing some more searches today about this stuff. The most relevant material I found is this crap:

http://natureinstitute.org/pub/ic/ic1/robots.htm

> What artificial intelligence researchers like about insects is their supposedly simple, rule-based intelligence. This is odd, however, since it is the human being, not the insect, who has gained the ability to think in a rule-driven manner.

and

> In the first place, no one would be so foolish as to claim that an insect obeys rules in any literal sense. Certainly there is no conscious obedience going on, nor even a conscious apprehension of rules. At most one might say that the insect has rules of behavior somehow "built in" to it, which it must follow.

The article leaves me doubting that the author can code. I didn't find any arguments worth adding to my discussion tree.

http://www.bbc.co.uk/ethics/animals/rights/rights_1.shtml

This page gives some counter arguments against animal rights, then doesn't even try to argue why they're wrong. And it mentions something I saw elsewhere too:

> The French philosopher Rene Descartes, and many others, taught that animals were no more than complicated biological robots.

So the view I'm trying to find arguments against – that animals are complicated biological robots – was known long before we had computers and long before Singer's 1973 article. So it shouldn't be too hard to find a rebuttal to it from some animal rights advocate, right? But so far I haven't found one (let alone what I really want: a rebuttal that has a modern scientific understanding of computation, physics, etc.).

I checked Singer's *Animal Liberation* book. It has no discussion of computation. It mentions robots twice:

> We also know that the nervous systems of other animals were not artificially constructed—as a robot might be artificially constructed—to mimic the pain behavior of humans. The nervous systems of animals evolved as our own did, and in fact the evolutionary history of human beings and other animals, especially mammals, did not diverge until the central features of our nervous systems were already in existence.

This doesn't make sophisticated, nuanced, detailed or scientific arguments. Yes there are evolutionary reasons (rather than artificial reasons chosen by an intelligent designer) that animals have informational signals related to physical damage, algorithmically factor this into their behavior, and also that behavior includes communication with other animals. That doesn't tell you that animals aren't robots. It doesn't tell you that they can suffer. It makes evolutionary sense without suffering. Singer continues in the next sentence by showing he doesn't think about the issues in the way a robot programmer would:

> A capacity to feel pain obviously enhances a species’ prospects of survival, since it causes members of the species to avoid sources of injury.

Getting the information and computationally processing the information is useful. That doesn't mean the animal has a mind, can suffer, feels pain in the sense that humans do, etc. Singer continues:

> It is surely unreasonable to suppose that nervous systems that are virtually identical physiologically, have a common origin and a common evolutionary function, and result in similar forms of behavior in similar circumstances should actually operate in an entirely different manner on the level of subjective feelings.

But subjective feelings are (arguably, at least, and Singer doesn't refute this) an aspect of minds not nerves.

> In theory, we could always be mistaken when we assume that other human beings feel pain. It is conceivable that one of our close friends is really a cleverly constructed robot, controlled by a brilliant scientist so as to give all the signs of feeling pain, but really no more sensitive than any other machine. We can never know, with absolute certainty, that this is not the case. But while this might present a puzzle for philosophers, none of us has the slightest real doubt that our close friends feel pain just as we do. This is an inference, but a perfectly reasonable one, based on observations of their behavior in situations in which we would feel pain, and on the fact that we have every reason to assume that our friends are beings like us, with nervous systems like ours that can be assumed to function as ours do and to produce similar feelings in similar circumstances.

This is similar to the comments in the articles that I already have in the discussion tree.

Singer on Descartes

To his credit, in his book, Singer does respond to Descartes. I haven't read the relevant Descartes material to check how accurate this is.

> Under the influence of the new and exciting science of mechanics, Descartes held that everything that consisted of matter was governed by mechanistic principles, like those that governed a clock. An obvious problem with this view was our own nature. The human body is composed of matter, and is part of the physical universe. So it would seem that human beings must also be machines, whose behavior is determined by the laws of science.

> Descartes was able to escape the unpalatable and heretical view that humans are machines by bringing in the idea of the soul. There are, Descartes said, not one but two kinds of things in the universe, things of the spirit or soul as well as things of a physical or material nature. Human beings are conscious, and consciousness cannot have its origin in matter. Descartes identified consciousness with the immortal soul, which survives the decomposition of the physical body, and asserted that the soul was specially created by God. Of all material beings, Descartes said, only human beings have a soul. (Angels and other immaterial beings have consciousness and nothing else.)

> Thus in the philosophy of Descartes the Christian doctrine that animals do not have immortal souls has the extraordinary consequence that they do not have consciousness either. They are, he said, mere machines, automata. They experience neither pleasure nor pain, nor anything else. Although they may squeal when cut with a knife, or writhe in their efforts to escape contact with a hot iron, this does not, Descartes said, mean that they feel pain in these situations. They are governed by the same principles as a clock, and if their actions are more complex than those of a clock, it is because the clock is a machine made by humans, while animals are infinitely more complex machines, made by God.[24]

> This “solution” of the problem of locating consciousness in a materialistic world seems paradoxical to us, as it did to many of Descartes’s contemporaries, but at the time it was also thought to have important advantages. It provided a reason for believing in a life after death, something which Descartes thought “of great importance” since “the idea that the souls of animals are of the same nature as our own, and that we have no more to fear or to hope for after this life than have the flies and ants” was an error that was apt to lead to immoral conduct. It also eliminated the ancient and vexing theological puzzle of why a just God would allow animals—who neither inherited Adam’s sin, nor are recompensed in an afterlife—to suffer.[25]

While my conclusions have partial overlap with Descartes (as presented here by Singer), I certainly don't agree with most of that. I'm an atheist who respects modern science. Sadly, Singer here isn't making arguments to refute a modern, scientific viewpoint that shares some claims with Descartes'.

It looks to me like Singer ought to have investigated computers more but didn't. But the primary important thing is whether any pro animal rights people have done that. I still expect that some have tried, but I haven't found that literature yet.

Paper: Why animals are not robots

This paper actually proposes three categories. It differentiates humans, animals and robots. It doesn't equate humans with animals.

What does it claim is different about animals and robots? From the full text:

> Humans are not robots, robots are not animals, and animals are not humans. Cognitively, humans, robots and animals belong to three distinct categories.[1] In contemporary discussions of expertise, the assumption, however, has been to assimilate humans-acting-as-animals and other living organisms (LOs) with machines (e.g. Collins 2010). Obviously, animals do not conform to traditional ideas of expertise (Collins 2011), so why problematize that animals are lumped together with machines in the contemporary science of expertise?

It opens by suggesting, with cites, that the literature generally groups animals with robots/machines (as I do). Its position is dissent from that.

> The complexity of organisms, the heterarchical purposeful, gravity centred organisation (in which ‘gravity’ is a metaphor for the inevitable self-sustenance), clearly demarcates organisms from machines. Robots are not qualitatively ‘the same’ as LOs, nor are they just simpler versions.

These aren't scientific or computational arguments, so they aren't what I'm looking for. There are two arguments. I'll respond to them:

1) Greater complexity is quantitative, not qualitative difference, so I disagree with that argument.

2) That's the only use of "heterarchical" in the paper and the word doesn't appear in the 8 dictionaries I checked. Wikipedia says:

> A heterarchy is a system of organization where the elements of the organization are unranked (non-hierarchical) or where they possess the potential to be ranked a number of different ways.

Robot software can do that.
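As a minimal sketch of what I mean, assuming a toy robot with three subsystems and made-up priority scores (every name and number here is hypothetical, not taken from the paper), the same unranked elements can be ranked in different ways depending on context:

```python
# Hypothetical robot subsystems with made-up scores for two contexts.
# No subsystem is permanently "above" another; the ranking depends on context.
subsystems = {
    "navigation": {"safety": 2, "battery_saving": 1},
    "obstacle_avoidance": {"safety": 3, "battery_saving": 2},
    "charging": {"safety": 1, "battery_saving": 3},
}


def rank_by(context):
    """Return subsystem names ordered from highest to lowest score in a context."""
    return sorted(
        subsystems,
        key=lambda name: subsystems[name][context],
        reverse=True,
    )


safety_order = rank_by("safety")
battery_order = rank_by("battery_saving")
```

Here `safety_order` puts obstacle avoidance first while `battery_order` puts charging first. That's all the Wikipedia definition asks for, and it's ordinary software.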

I think the thing about metaphorical "gravity" means that robots and animals have different design goals. Sure but that doesn't necessarily make their physical characteristics, after being designed and created, different in relevant ways. (For simplicity, in this paragraph I treat biological evolution metaphorically as a designer.)

Project Status

I'm done searching for relevant literature for now. I'll contact some animal rights advocates, e.g. on reddit, and see what they say, especially if they can direct me to relevant literature (including blog posts, but preferably not ad hoc forum comments). I'll hold off on updating the discussion tree until after that. I don't have much to add yet anyway, probably just brief comments with two nodes (one for Singer and one for my comment). It'd be the Singer Descartes stuff and Singer robot stuff.

When I update the discussion tree I'll keep the originals available in the post and add the new tree too. I won't replace the original tree.

Forum Posts

https://www.reddit.com/r/AnimalRights/comments/dyc77w/seeking_proanimal_rights_literature/

https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/

https://vegtalk.org/science-philosophy-religion/seeking-pro-animal-rights-literature-t85767.html

https://www.happycow.net/forum/animal-rights/seeking-pro-animal-rights-literature/5284

Pending review at https://animalpeopleforum.org/

(Slightly edited the remark about Reddit at the end for the non-reddit sites.)

# Seeking Pro-Animal Rights Literature

I'm a philosopher (and programmer) attempting to research and diagram arguments relating to animal rights. I'm looking for help finding literature with certain types of pro animal rights arguments.

What I've done so far:

https://curi.us/2240-discussion-tree-state-of-animal-rights-debate

I'm skeptical of animal rights but I'm trying to deal with the issues in an objective, truth seeking way. The types of arguments I'm looking for are specified in the diagram. I want arguments that would allow me to add more nodes to the diagram.

The main thing I'm looking for are arguments relating to modern science and computation which rigorously differentiate animals from robots controlled by software. I would expect the author to be a skilled programmer who agrees that brains are computers, understands computational universality, has something substantive to say about the difference between non-AGI algorithms and intelligence, acknowledges and specifies many types of non-intelligent algorithms (e.g. A* and everything else used in current video games), and then gives some scientific documentation of specific animal behaviors and why they can't be accounted for with non-intelligent algorithms.

I've searched a bunch and haven't been able to find this so far.

Rigorous, modern, scientific arguments that brains are not computers would also be relevant. Arguments for dualism could be relevant too. Arguments against my epistemology (Critical Rationalism) could also be relevant. I'd prefer arguments which specifically relate to nodes in my discussion tree diagram.

Academic papers are fine. Books are fine. Paywalls are fine. Nothing is too technical or detailed. But those aren't requirements, e.g. serious blog posts are OK too. I'm not very interested in people writing ad hoc rebuttals in Reddit comments. If you want to debate me personally, see https://elliottemple.com/debate-policy

Ask Yourself Discord

I posted this to the Ask Yourself discord (He's a vegan YouTube debater https://www.youtube.com/channel/UCQNmHyGAKqzOT_JsVEs4eag ). I'm currently streaming on Twitch. The video will be posted to YouTube later.

I’m a non-vegan philosopher (and programmer) attempting to research and diagram arguments relating to animal rights. I'm looking for help finding literature with certain types of pro animal rights arguments. What I've done so far: https://curi.us/2240-discussion-tree-state-of-animal-rights-debate

I’m trying to proceed in an organized, objective, truth seeking way. I’m looking for pro animal rights literature (anything from serious blog posts to academic papers) making certain arguments, particularly arguments that will add additional nodes to my diagram. The type I’m most seeking is someone who is familiar with both modern science and programming arguing that animals are not like robots running software consisting of non-AGI algorithms. I found arguments like that were missing in Singer (where I started) and didn’t find anything promising after searching. Could people here help with this project?

Ask Yourself discord link: https://discord.gg/dUPFfby

I looked at the *Fellow Creatures* book a bit because someone on Ask Yourself discord replied suggesting it. It didn't seem to have anything addressing my arguments. The person somehow hadn't noticed my diagram or that I brought up computers.

Also I got banned on Ask Yourself for being too slow to begin a verbal debate. I told them I was trying to fix an audio problem and they banned me while I was doing that.

curi Today at 5:04 PM

I’m a non-vegan philosopher (and programmer) attempting to research and diagram arguments relating to animal rights. I'm looking for help finding literature with certain types of pro animal rights arguments. What I've done so far: https://curi.us/2240-discussion-tree-state-of-animal-rights-debate

I’m trying to proceed in an organized, objective, truth seeking way. I’m looking for pro animal rights literature (anything from serious blog posts to academic papers) making certain arguments, particularly arguments that will add additional nodes to my diagram. The type I’m most seeking is someone who is familiar with both modern science and programming arguing that animals are not like robots running software consisting of non-AGI algorithms. I found arguments like that were missing in Singer (where I started) and didn’t find anything promising after searching. Could people here help with this project?

Discussion Tree: State of Animal Rights Debate

I began organizing the current state of the animal rights debate into a discussion tree diagram. I started with Peter Singer because he's a well known intellectual with pro animal rights writing. I

I am looking at the book Fellow Creatures right now. It doesn't address software issues but I'm seeing what it does have.

It says e.g.:

Creatures themselves, not pleasure or pain or intrinsic values, are the source of value. Things can be good or bad at all because they are good-for or bad-for creatures.

This doesn't address the position that cows don't have values or preferences because it takes intelligent, rational thought to make value judgments, form opinions about goals, or preferences, etc. I don't yet know if that's addressed elsewhere in the book. That's the sort of thing I want an answer to.

futilegod Today at 5:19 PM

You don’t think a cow would value their child or prefer not to be killed?

curi Today at 5:19 PM

Right because I don't think cows have intelligent minds that consider things like that. I think they're like complex robots run by non-AGI software algorithms.

Tempest Today at 5:22 PM

@curi What convinces you of that?

curi Today at 5:23 PM

I haven't been able to find any arguments or observed animal behavior to the contrary, and I've read things like http://curi.us/272-algorithmic-animal-behavior which scientifically observe and document the nature of animal behavior, and also, separately, I have a model of how minds and brains work related to Critical Rationalist epistemology.

Algorithmic Animal Behavior

If studied closely, animals can be seen (at least in some cases like these examples) to follow simplistic, algorithmic behavior patterns (like software where the designer didn't think about some potent

Tempest Today at 5:27 PM

What would be an example of a behavior that would raise the probability that non-human animals have non-robotic minds?

curi Today at 5:28 PM

Building a space ship.

Tempest Today at 5:28 PM

what would be the minimum?

curi Today at 5:29 PM

An intelligent conversation is one option, but I don't think language is required if they could show they are learning and problem solving in a way that goes beyond algorithms in their DNA and creates new knowledge.

futilegod Today at 5:30 PM

Ok Descartes lol

curi Today at 5:31 PM

I'm looking for literature refuting this. Do you know of some or just laugh without arguments?

futilegod Today at 5:31 PM

Ever seen a pig play video games?

It’s pretty lit

curi Today at 5:37 PM

I gave a similar NTT answer at http://curi.us/1169-morality#14208 I was hoping people here would have some interest in providing sources which rigorously address these matters. Don't those exist?

Stream of going to the Ask Yourself discord:

https://www.youtube.com/watch?v=6dYjSEPQf20&list=PLKx6lO5RmaeuXinlXLiZtm16HNE20KPdL

At https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/

I was directed to the following claim (quote 5 from the menu):

http://www.scdesign.ie/ar_results1.php

Tom Regan in his book *The Case for Animal Rights*:

> Given the survival value of consciousness ... one must expect that it would be present in many species, not in the human species only.

What survival value? What a circumstantial evidence type argument. And having a mind capable of designing and building skyscrapers has survival value but it doesn't mean it's present in any other species. So it's ridiculous.

The other quotes I was advised to read also lack a modern scientific understanding, let alone an understanding of computer algorithms. They don't address the issues I raised.

I was also told that the issues I raised are unnecessary to address and that's why there is no literature addressing them. That is: it doesn't matter if a dog is like a complicated Roomba that can't suffer, the case for animal rights works anyway. I don't think he really thought through or meant what he said, he was just being dumb.

Hi Mr Curi

"Creatures themselves, not pleasure or pain or intrinsic values, are the source of value. Things can be good or bad at all because they are good-for or bad-for creatures.

This doesn't address the position that cows don't have values or preferences because it takes intelligent, rational thought to make value judgments, form opinions about goals, or preferences, etc. I don't yet know if that's addressed elsewhere in the book. That's the sort of thing I want an answer to."

Are values or preferences required to experience suffering? Assuming the cow has a capacity to suffer isn't the relevant moral quality the degree of suffering? Why does the cow need to have the conscious knowledge that it would prefer not to suffer?

Why does a cow need to be differentiated from a software algorithm in order to validate its suffering?

#14391

> Are values or preferences required to experience suffering?

I think so.

If you don't think so, tell me what you think the requirement is for suffering. Do you agree a Roomba, self-driving car or AlphaGo doesn't suffer? If so, you should figure out what you think is relevantly different about them and a cow. Preferably you should approach that difference(s) in a technical way involving science and computer code.

CritRat asked my question

https://www.reddit.com/r/DebateAVegan/comments/dz3s9q/seeking_proanimal_rights_literature/

https://www.reddit.com/r/askphilosophy/comments/dz3xfc/seeking_proanimal_rights_literature/

https://www.reddit.com/r/vegan/comments/dyi0qm/random_thoughts_small_questions_and_general/f8375sx/

So far no relevant replies with sources. A few replies from people who know nothing about software and don't understand the question. A few references to literature or a video which do not answer the question (multiple times I've seen people *know* they are giving a non-answer, and mention it, before giving it anyway, which I find weird).

CritRat thinks (said on Discord) it's understandable that they don't get it because they don't know about Critical Rationalism. I think widespread knowledge about software and AGI should be adequate to see there's an important question here even without being able to code (it's hard to answer the question without being able to code, but I think a non-coder can understand it fine). CritRat is not a coder btw. I will write a blog post explaining that.

I Made a Video About This

https://www.youtube.com/watch?v=0WJBvtjUAOo

Two comments by me

https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/f85sjxo/

> If cows are like self-driving cars, then cows are not conscious. Where is the animal rights and/or liberation literature addressing this?

> Your claim that consciousness is not necessarily computational is, in my opinion, superstitious mysticism that is ignorant of modern science and software. I think your claim that consciousness may be a fundamental constituent of the universe is both a rejection of the knowledge and attitudes of modern physics, plus it still hasn't provided an argument that any animal is conscious or given any criteria for deciding what is conscious.

> If you can refer me to literature arguing this stuff in a way that could conceivably persuade a person familiar with science and software (and philosophy), with a perspective like mine (rather than ignoring and not addressing my materialist, non-dualist perspective), please do. If there are good arguments I don't know about, and this isn't as anti-scientific as it sounds to me, I'd like to find out.

https://www.reddit.com/r/askphilosophy/comments/dz3xfc/seeking_proanimal_rights_literature/f85ofw5/

> Not all software is the same. E.g. some has/is general intelligence and some doesn't/isn't. Do you agree that Roombas can't and don't suffer or think? Then it must require some other type of software, that a Roomba doesn't have, to merit moral consideration, right? So what type is that and do animals have it? People in favor of animal rights should consider and write about that.

More Places To Ask

I haven't asked in these places. I'm recommending others try if interested.

https://www.quora.com/topic/Animal-Rights

https://vegetarianism.stackexchange.com

https://pets.stackexchange.com

https://philosophy.stackexchange.com

"Do you agree a Roomba, self-driving car or AlphaGo doesn't suffer?"

I agree that a Roomba or self driving car doesn't suffer.

"If so, you should figure out what you think is relevantly different about them and a cow."

A cow has a central nervous system that transmits pain signals to the brain. The brain in turn triggers the pain sensation in the relevant nerves. Pain would be classified as physical suffering.

A Roomba has a nervous system in the sense that it has sensors which communicate feedback to a processing unit. For example: the ground ahead is dark; turn off the motor and initiate rotation until the ground is no longer dark. It does not transmit pain signals or receive the pain sensation from its processing unit. Since it does not feel pain we can safely assume it doesn't experience physical suffering.
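The sensor rule in that example can be sketched in a few lines of Python. This is a hypothetical illustration of the rule as described, not actual Roomba firmware; the function names and the boolean `ground_is_dark` reading are assumptions made up for the sketch.

```python
def cliff_rule(ground_is_dark):
    """Return motor commands for one control step from a single sensor reading."""
    if ground_is_dark:
        # Dark ground ahead: stop driving and rotate in place.
        return {"drive": False, "rotate": True}
    # Ground is no longer dark: stop rotating and drive forward again.
    return {"drive": True, "rotate": False}


def run(readings):
    """Apply the rule to a sequence of sensor readings, one per control step."""
    return [cliff_rule(reading) for reading in readings]
```

Calling `run([False, True, True, False])` drives, rotates for two steps while the ground reads dark, then drives again. Nothing in the rule refers to pain or to any internal state beyond the current reading.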

#14397 A central nervous system could be put in a robot. That is a matter of the specific physical mechanism that sends sensor data from the sensors to the CPU/brain. The information sent can be the same for a wide variety of mechanisms. Wires with no spine work fine. I don't see how that's a relevant characteristic. The mechanism of transmitting information within the entity doesn't tell us what information process (aka computation) happens when the information is processed in the CPU/brain or the nature of that processing.

In other words, you aren't differentiating different types of informational signals. You call some "pain" signals but how do they physically/computationally/scientifically differ from other informational signals giving sensor readings, including e.g. sensor data about damage or bad things (e.g. deflated tire or scrape or hole in side of car)? I think animals have nerves that detect some types of damage because that information has useful survival value when factored into some behavior determining algorithms, not because any mental feeling is involved, so it's essentially equivalent to what a self-driving car would have.
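Here's a minimal sketch of what I mean by factoring a damage reading into a behavior determining algorithm. The sensor names, thresholds and actions are all made up for illustration; I'm not claiming any real car or animal uses these rules, only that a damage signal can be handled like any other sensor value.

```python
def choose_action(sensors):
    """Pick an action from sensor readings using fixed rules.

    The damage reading is treated as one more number among the inputs.
    Nothing in this function models a feeling.
    """
    if sensors["damage"] > 0.5:
        # High damage reading: move away from whatever is causing it.
        return "retreat"
    if sensors["obstacle_distance"] < 1.0:
        # Something is close ahead: steer around it.
        return "turn"
    return "continue"


damaged = choose_action({"damage": 0.9, "obstacle_distance": 5.0})
blocked = choose_action({"damage": 0.0, "obstacle_distance": 0.4})
clear = choose_action({"damage": 0.0, "obstacle_distance": 5.0})
```

The `retreat` behavior looks like pain avoidance from the outside. The question I'm asking is what an animal has, in computational terms, beyond this kind of rule.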

https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/f85vpbq/?context=3

> That argument is bad. Humans clearly (maybe you disagree!?) have some sort of mental capabilities which have positive survival value and which are not shared by any other animals, e.g. the ability to invent concrete and build skyscrapers, to invent and build space ships, to research and use sophisticated medicines, etc. The fact that something has survival value doesn't mean you can assume other species have it.

>

> And are you saying there are no animal rights advocates who know enough to differentiate humans from robots using a scientific and software-oriented approach? That seems damning.

https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/f85xghx/?context=3

> Information is physical, see modern physics, e.g. https://arxiv.org/pdf/quant-ph/0104033.pdf

> I'm asking for a sophisticated comparison involving animals from someone who actually understands software algorithms and can tell the difference between behavior that is similar to a human and behavior that is similar to a Roomba, chess engine, self-driving car, etc.

> I'm not being arrogant, I'm here asking for literature to read which argues against my views. So far I haven't gotten any relevant response from anyone which addresses the issues and arguments I've brought up relating to computation.

I found some relevant literature

https://reducing-suffering.org/do-video-game-characters-matter-morally/

https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/f8615bj/?context=3

> I think all humans (with exceptions like braindead people on life support) have *general intelligence* (the thing that Artificial General Intelligence researchers are trying to write software for). This cognitively differentiates humans from any animal.

> I specifically said your view of information being non-physical is contradicted by modern physics and gave a physics paper as a source. I don't see what's arrogant about this claim.

Question on philosophy stack exchange

#14393 https://philosophy.stackexchange.com/questions/68549/where-can-i-find-arguments-for-animal-rights

Hi Mr Curi

"A central nervous system could be put in a robot."

How is the system of sensors in a robot right now different from a central nervous system?

"information process (aka computation) happens when the information is processed in the CPU/brain or the nature of that processing."

This is true. The mind of an animal will always be a black box that only allows us to observe inputs and output.

"You call some "pain" signals but how do they physically/computationally/scientifically differ from other informational signals giving sensor readings"

They don't. A signal is just electrical energy being transmitted from a sensor to a processing unit. It could be a nerve ending and a brain or an electrical sensor and a CPU. Of course the information encoded in that signal will trigger different reactions from the processing unit.

A "pain" signal would tell the processing unit to trigger a physical response in the affected area. We observe the effect of this physical response in the behaviour of the animal.

An animal has the capability to feel these painful physical responses and thus can experience physical suffering. No robots that I am aware of replicate this experience.

Of course we cannot experience what the animal experiences and thus we cannot "know" for certain whether they felt pain or not. If you place the burden of proof on me to show that animals feel pain I cannot satisfy that burden. I would ask why you think that two animals with remarkably similar mechanisms for delivering pain would experience that pain in completely different ways. Also my understanding is that the scientific consensus is that animals do feel pain. Do you have any arguments refuting their work other than "experience is subjective and we can't know for sure what animals feel"?

"because that information has useful survival value when factored into some behavior determining algorithms, not because any mental feeling is involved, so it's essentially equivalent to what a self-driving car would have."

This is your opinion though. There is strong evidence that all vertebrates experience pain.

https://www.ncbi.nlm.nih.gov/books/NBK32655/

"Instead, the consensus of the committee is that all vertebrates should be considered capable of experiencing pain. This judgment is based on the following two premises: (1) the strong likelihood that this is correct, particularly for mammals and birds (Box 1-4 provides compelling evidence for rats, for example); and (2) the consequences of being wrong, that is, acting on the assumption that all vertebrates are not able to experience pain and so treating pain as though it were merely nociception, an error with obvious and serious ethical implications."

So clearly the safer moral position is to assume that animals can feel pain. The issue with your position that I see is that you must agree it is ethical to beat, torture and abuse a puppy as it might or might not be able to feel pain. If you believe this is true I would be curious to know how much time you've spent with animals.

I would also add that so far we have only focused on physical suffering and ignored the other differences between robots and non-human animals. For example stress hormones being released which cause mental suffering. Obviously no such hormones exist in a robot.

> An animal has the capability to feel these painful physical responses and thus can experience physical suffering. No robots that I am aware of replicate this experience.

Where are the arguments regarding what experiencing suffering is in computational terms, and why animals can do it but no current robots can? What physical or algorithmic trait makes the difference? Got any literature which addresses the issues I'm trying to ask about?

Your link does not try to address the computation issues. It leaves the same big hole in its reasoning that Singer did.

Hi Mr Curi

>Where are the arguments regarding what experiencing suffering is in computational terms, and why animals can do it but no current robots can?

Where are the arguments regarding what experiencing suffering is in computational terms, and why humans can do it but no current robots can?

I think we could analyze these arguments and then check to see if the same mechanisms for pain delivery exist in non-human animals.

If the process whereby a human feels pain is the same process whereby a non-human animal feels pain I would ask you what leads you to believe that pain would be experienced differently?

The reason robots cannot currently feel pain is because unlike humans and non-human animals they do not have regions of their processing units that are activated by noxious stimuli. These regions in the brain are responsible for the experience of pain. As far as we know these mechanisms work in the same way for human and non-human animals. Do you have any evidence using neuro-imaging that non-human animal brains aren't activated when they undergo noxious stimuli? Without that evidence I'm not sure why you would come to a conclusion that animals don't feel pain.

Hi Mr Curi

>CritRat thinks (said on Discord) it's understandable that they don't get it because they don't know about Critical Rationalism.

I would also add that I'm quite sure people on the debate a vegan subreddit are familiar with critical rationalism.

Just as you have rules for engaging in debate we have rules for responding to posts. Since you put no effort into your post and didn't cohesively make any specific argument I'm not surprised people aren't willing to put effort into their responses.

If you have a specific argument to bring around animal rights please post it and I'm sure you'll receive lots of strong discussion.

> Since you put no effort into your post and didn't cohesively make any specific argument I'm not surprised people aren't willing to put effort into their responses.

How is creating a diagram of arguments, and doing quite a bit of research (see the comments above), "no effort"? You're being unreasonable and hostile.

Also FYI you're apparently from some particular place where I asked a question but you haven't told me which one.

#14408

Check out my new post:

https://curi.us/2246-animal-rights-issues-regarding-software-and-agi

I was referred to and looked through the book, but wasn't given any specific passages or quotes.

https://www.amazon.com/Death-Ethic-Life-John-Basl-ebook/dp/B07N94DQ16/

I didn't find in it the sorts of arguments I'm looking for. It doesn't analyze stuff like what algorithms animals might be using or couldn't be using. It doesn't address my scientific, computational worldview. If you think I missed the arguments, please point me to them more specifically.

---

Also, someone brought up that there are critics of the idea that brains are computers. Yeah but I don't know any good ones. If you know some you think are good, rather than ignorant or unscientific, please share.

BobSeger1945 said this to me:

https://www.reddit.com/r/vegan/comments/dyccjq/seeking_proanimal_rights_literature/f86cyrk/?context=3

> I strongly doubt it. But the most popular computational theory of consciousness is IIT, and its leading proponent (Koch) believes animals are conscious, and he has written a lot about how we can measure consciousness (using NCC's). So I recommend you look there.

He doubts any animal rights advocates have addressed the issues I raise.

IIT is https://en.wikipedia.org/wiki/Integrated_information_theory

BobSeger1945 also said:

> What you need to reject is the immature view that dualism is religion or mysticism. Many scientists have embraced property dualism, which has nothing to do with religion, and is totally compatible with physics. It proposes that consciousness is a property of matter (like mass and charge).

Scientists have embraced dualism so now it's OK!? Uh, no, it's still trash philosophy and an unscientific attempt to ignore physics and evidence and make crap up. It's as mystical as saying maybe God, ghosts, ESP, astrology or Heaven is a property of matter like mass or charge. And he linked wikipedia instead of some sort of argument that would explain why it's not mysticism to a person with a materialist view, as I'd just requested.

I will look at IIT more. I will not look at dualism more unless someone gives specific arguments and sources they think are good and which actually address my worldview.

Hi Mr Curi

5) Argue your position (submissions only). Your submission should be supported by some argument (written in your own words), and sources if possible.

>How is creating a diagram of arguments, and doing quite a bit of research (see the comments above), "no effort"? You're being unreasonable and hostile.

Not trying to be hostile, just pointing out you didn't put forward a specific argument and you didn't make the post yourself.

I did mention that I came from the DebateAVegan subreddit. I think posting a link to your blog post there would be very well received.

I will also point out I haven't read The Beginning of Infinity.

Now let's look at your argument on suffering:

"The reason I favor slaughtering cows is that I have no significant doubt about whether a cow has general intelligence."

P1: If a being doesn't have general intelligence it cannot experience suffering.

P2: A cow doesn't have general intelligence.

C: A cow cannot experience suffering.

I would ask you to provide proof for your P1.
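As a rough sketch of why the dispute centers on P1, the syllogism above can be checked by brute force over truth values. The propositional encoding (`g` for "has general intelligence", `s` for "can suffer") and the helper names are assumptions made for illustration, not anything from the blog post. The check only shows that the argument form is valid; it says nothing about whether P1 is true.

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: 'a implies b'."""
    return (not a) or b

def is_valid(premises, conclusion, n_vars: int) -> bool:
    """Valid means: no truth assignment makes every premise true and the conclusion false."""
    for values in product([False, True], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False
    return True

# Hypothetical encoding: g = "has general intelligence", s = "can suffer"
p1 = lambda g, s: implies(not g, not s)  # P1: no general intelligence -> no suffering
p2 = lambda g, s: not g                  # P2: the cow has no general intelligence
c = lambda g, s: not s                   # C: the cow cannot suffer

syllogism_valid = is_valid([p1, p2], c, n_vars=2)
without_p1_valid = is_valid([p2], c, n_vars=2)
print(syllogism_valid, without_p1_valid)
```

With both premises the form holds, and without P1 the conclusion no longer follows, so everything turns on whether P1 should be accepted.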

Also not sure if you had any comment on my post above:

"Patrick B at 3:32 PM on November 20, 2019 | #14408"

from https://reducing-suffering.org/do-video-game-characters-matter-morally

In the Summary section he lays out his basic claim:

> The difference between non-player characters (NPCs) in video games and animals in real life is a matter of degree rather than kind. NPCs and animals are both fundamentally agents that emerge from a complicated collection of simple physical operations, and the main distinction between NPCs and animals is one of cognitive and affective complexity. Thus, if we care a lot about animals, we may care a tiny bit about game NPCs, at least the more elaborate versions. I think even present-day NPCs collectively have some ethical significance, though they don't rank near the top of ethical issues in our current world. However, as the sophistication and number of NPCs grow, our ethical obligations toward video-game characters may become an urgent moral topic.

In the section on Goal Directed Behavior and Sentience you can get some more sense of where he's coming from:

> As I've argued elsewhere, sentience is not a binary property but can be seen with varying degrees of clarity in a variety of systems. We can interpret video-game characters as having the barest rudiments of consciousness, such as when they reflect on their own state variables ("self-awareness"), report on state variables to make decisions in other parts of their program ("information broadcasting"), and select among possible actions to best achieve a goal ("imagination, planning, and decision making"). Granted, these procedures are vastly simpler than what happens in animals, but a faint outline is there. If human sentience is a boulder, present-day video-game characters might be a grain of sand.

he has links for his sentience-is-not-binary stuff btw.

I skimmed briefly and struggled to find any clear, unhedged bottom line conclusions. He seems to rely on some Dennett stuff about "stance levels" a lot btw. But the piece, while long, sorta had the tone of thinking out loud and considering some ideas rather than trying to get to a clear cut answer.

E.g.:

> NPCs typically come into existence for a few seconds and then are injured to the point of death. It's good that the dumb masses of NPC enemies like Goombas may not be terribly ethically problematic anyway, and the more intelligent "boss-level" enemies that display sophisticated response behavior and require multiple injuries to be killed are rarer. Due to the inordinate amounts of carnage in video games, game NPCs may be many times more significant than, say, a small plant per second. Perhaps a few highly elaborate NPCs approach the significance of the dumbest insects.

"may not be terribly ethically problematic" is a very hedged statement.

Or there is this:

> If human sentience is a boulder, present-day video-game characters might be a grain of sand.

This weight/size metaphor stuff is very vague and useless as a guide to action/judgment.

It also seemed like he was trying to jump around and deal with a lot of issues at once rather than focus his discussion carefully.

this is based on my looking at a pretty long piece for a very few minutes. It's not a very thorough or "fair" treatment at all. Still thought it would be worth sharing. The piece does not look promising to me. The fact that his view rests on some kind of continuum-of-sentience perspective seems the most problematic from what I read.

> I did mention that I came from the DebateAVegan subreddit.

When, where?

> you didn't put forward a specific argument and you didn't make the post yourself.

You're accusing me of not doing something in a post that you know I didn't write.

> I would ask you to provide proof for your P1.

See https://curi.us/2246-animal-rights-issues-regarding-software-and-agi

Or provide some alternative theory of suffering to mine (from a scientific and computational perspective, not vague assertions).

Read about IIT. Looks like junk that doesn't have arguments to refute any of my views and has no clue about CR and doesn't seem to know or say much about computation or physics either. If anyone knows that I'm missing something, please refer me to a specific source.

>When, where?

"I would also add that I'm quite sure people on the debate a vegan subreddit are familiar with critical rationalism."

>You're accusing me of not doing something in a post that you know I didn't write.

Then your post shouldn't have been made. That being said I'm glad it was because now we're talking.

"I think capacity to suffer is related to general intelligence because suffering involves making value judgments like not wanting a particular outcome or thinking something is bad. Suffering involves having preferences/wants which you then don’t get. I don’t think it’s possible without the ability to consider alternatives and make value judgments about which you prefer, which requires creative thought and the ability to create new knowledge, think of new things. This is a very brief argument which I’m not going to elaborate on here."

P1: If a being cannot make value judgements it cannot experience suffering.

P2: A cow cannot make value judgements.

C: A cow cannot experience suffering.

Again, I would ask you for proof of your P1. Or feel free to expand if you feel I misunderstand your argument.

If a person becomes senile and no longer has the capacity to make value judgements would it be ethical to torture them?

https://www.ncbi.nlm.nih.gov/books/NBK32655/

Looks like this article on the physical mechanisms of pain fails to distinguish physical pain mechanisms (information signals and processing) from suffering and mental states (which it doesn't know about).

It's failing at the basic task of differentiating the stuff it's saying from robots like self-driving cars in any important way.

> "I would also add that I'm quite sure people on the debate a vegan subreddit are familiar with critical rationalism."

That doesn't say where you came from. You're not good enough at logic to debate productively. It's also an odd assertion given how rare CR knowledge is.

> Then your post shouldn't have been made.

*It is not my post.* Which part are you not understanding?

> If a person becomes senile and no longer has the capacity to make value judgements would it be ethical to torture them?

Senile people can make value judgments. I already referred you to my post which addresses your issue about P1 and you just ignored me and repeated yourself. I've lost interest. See https://elliottemple.com/debate-policy

#14419

>It's failing at the basic task of differentiating the stuff it's saying from robots like self-driving cars in any important way.

There's no reason that robots couldn't be developed that had the same mechanisms to feel physical pain that animals do. It's just that none have that capability right now.

A robot cannot experience pain because it has no part of its brain responsible for the sensation of pain. Both humans and non-human animals have these capabilities built into their nervous systems.

> There's no reason that robots couldn't be developed that had the same mechanisms to feel physical pain that animals do. It's just that none have that capability right now.

You're failing to differentiate animals from robots that exist right now. Robots and cows both have sensors which send information to the brain/CPU where software algorithms process it. Like the link, you aren't saying anything to address this.
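A minimal sketch of the sensors-and-algorithms picture, assuming a simple rule-table design: a reading labeled "damage" is handled by the same lookup logic as any other sensor reading. All names, channels and thresholds here are hypothetical, and the sketch does not model any real robot or animal. It only illustrates the question being asked, which is what would make one signal different in kind from another at the computational level.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    channel: str   # hypothetical label, e.g. "damage" or "light"
    value: float   # sensor magnitude, assumed to lie between 0.0 and 1.0

# Hypothetical rule table: channel -> (threshold, response)
RULES = {
    "damage": (0.5, "withdraw"),
    "light": (0.8, "turn_away"),
}

def respond(reading: Reading) -> str:
    """Pick a response with a fixed, non-AGI rule lookup."""
    # Unknown channels get an unreachable threshold, so they never trigger.
    threshold, action = RULES.get(reading.channel, (float("inf"), "ignore"))
    return action if reading.value >= threshold else "continue"

readings = [
    Reading("damage", 0.9),
    Reading("light", 0.9),
    Reading("damage", 0.1),
    Reading("sound", 1.0),
]
responses = [respond(r) for r in readings]
print(responses)
```

Nothing in the code treats the "damage" channel specially; the label is just a dictionary key.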

#14420

>You're not good enough at logic to debate productively.

Resorting to personal attacks is not very productive in my experience.

>*It is not my post.* Which part are you not understanding?

Well it was posted on your behalf and I assumed you had requested it be posted.

>Senile people can make value judgments.

I don't see a source for this in your blog post. I would happily read over such a source if you could provide it.

>I already referred you to my post which addresses your issue about P1 and you just ignored me and repeated yourself.

I referenced your blog post here:

"I think capacity to suffer is related to general intelligence because suffering involves making value judgments like not wanting a particular outcome or thinking something is bad. Suffering involves having preferences/wants which you then don’t get. I don’t think it’s possible without the ability to consider alternatives and make value judgments about which you prefer, which requires creative thought and the ability to create new knowledge, think of new things. This is a very brief argument which I’m not going to elaborate on here."

And I rewrote my version of your argument to include value judgements instead of general intelligence:

P1: If a being cannot make value judgements it cannot experience suffering.

P2: A cow cannot make value judgements.

C: A cow cannot experience suffering.

Can you provide me with a logical argument that proves P1? I'm not interested in trying to peruse your entire blog post and infer the proof myself. If you can't provide a logical proof then I'm not sure why anyone would accept your conclusion as valid.

>I've lost interest.

That's a shame. If you regain interest in proving your ideas feel free to respond.

#14422

>You're failing to differentiate animals from robots that exist right now.

I addressed this in an earlier post to which you haven't responded.

See below:

>Where are the arguments regarding what experiencing suffering is in computational terms, and why animals can do it but no current robots can?

Where are the arguments regarding what experiencing suffering is in computational terms, and why humans can do it but no current robots can?

I think we could analyze these arguments and then check to see if the same mechanisms for pain delivery exist in non-human animals.

If the process whereby a human feels pain is the same process whereby a non-human animal feels pain I would ask you what leads you to believe that pain would be experienced differently?

The reason robots cannot currently feel pain is because unlike humans and non-human animals they do not have regions of their processing units that are activated by noxious stimuli. These regions in the brain are responsible for the experience of pain. As far as we know these mechanisms work in the same way for human and non-human animals. Do you have any evidence using neuro-imaging that non-human animal brains aren't activated when they undergo noxious stimuli? Without that evidence I'm not sure why you would come to a conclusion that animals don't feel pain.

Hi Mr Curi

Just wanted to add I forgot to add my name on the two above posts (14424) (14423). Sorry for the confusion.

#14415 So he thinks not merely roombas but microwaves and parking meters are a little bit conscious. But he has no clear reasoning (i checked both links re "sentience is not a binary property"). And what about how everything does information processing at all times? If a photon hits a rock and bounces off, some physical variables change in accordance to some math. It physically constitutes a computation of some sort. So rocks and photons colliding is a little bit of consciousness? Working out an arithmetic problem on a piece of paper makes the paper and pen a little bit conscious? He hasn't thought through problems like these and I'm not even sure if he can code or tried to research physics and the physical aspects of computation.

#14423 Pointing out your logic errors isn't a personal attack. You didn't even have the integrity to admit you were wrong. If you think I'm mistaken to judge you as being not worth talking with, and judging you as someone unfamiliar with logic, computers, science, epistemology, etc., see https://elliottemple.com/debate-policy

> I'm not interested in trying to peruse your entire blog post and imply the proof myself.

You literally won't read my arguments about this but you're complaining that I no longer want to write new things for you!?

#14427

>Pointing out your logic errors isn't a personal attack

"You're not good enough at logic to debate productively."

I guess we have different definitions of personal attack. I assumed that if I mentioned a community your associate had posted in at your request you would assume I came from that community.

Since this portion of our discussion had nothing to do with a formal debate I don't see the issue with my assumption.

>If you think I'm mistaken to judge you as being not worth talking with, and judging you as someone unfamiliar with logic, computers, science, epistemology, etc., see https://elliottemple.com/debate-policy

Why would I go through the effort of "proving" my worth to you if you can't provide a simple logical proof behind your reasoning?

>You literally won't read my arguments about

I did read your argument and in fact I pasted the relevant sections and rewrote them into syllogisms for you:

P1: If a being cannot make value judgements it cannot experience suffering.

P2: A cow cannot make value judgements.

C: A cow cannot experience suffering.

I can promise you there was no clear logical proof for P1 in your blog post. If your argument is solid why won't you provide a simple logical proof?

#14430 Patrick, you aren't a programmer, aren't a scientist of a relevant type, don't know about AGI, don't know about the physics of computation, aren't a logician, aren't a philosopher, have no important expertise to bring to the discussion, and also aren't listening to the requests for literature from people who understand what they're talking about. Am I wrong about any of this?

curi has some expertise at all of those things and has already talked ~endlessly with people who need to be taught the entire fields step by step but are trying to argue instead of learn. That's why he doesn't want to talk to you more. But he still gave you a second chance via his debate policy so that, if he's wrong, it's possible for his error to be corrected.

Hi Mr Curi

#14431

>Patrick, you aren't a programmer, aren't a scientist of a relevant type, don't know about AGI, don't know about the physics of computation, aren't a logician, aren't a philosopher, have no important expertise to bring to the discussion, and also aren't listening to the requests for literature from people who understand what they're talking about. Am I wrong about any of this?

I am a programmer and have studied logic and philosophy in university. I admit that I am no leading expert in these fields.

I'm sure if curi is so intimately familiar with all of these topics he could easily provide the proof I requested. Reading his blog reveals there is no such proof:

>I think capacity to suffer is related to general intelligence because suffering involves making value judgments like not wanting a particular outcome or thinking something is bad.

Thinking something is true and having a logical proof that it is true are very different.

I will gladly await clarification or proof from mr curi.

I posted:

https://www.quora.com/unanswered/Is-there-scientifically-documented-behavior-of-any-nonhuman-animal-which-is-incompatible-with-animals-being-robots-running-non-AGI-software-algorithms-like-those-used-in-video-games-and-self-driving-cars

https://www.reddit.com/r/DebateAVegan/comments/dzb2ge/animal_rights_issues_regarding_software_and_agi/

https://www.reddit.com/r/DebateAVegan/comments/dzbzbt/animal_behaviors_that_couldnt_be_robotic/

https://vegetarianism.stackexchange.com/questions/2034/non-robotic-animal-behaviors

I will post to the pets stack exchange in 40 minutes when it allows me to post a second time.

I wrote some new text that I used:

---

Can anyone point me to written arguments which point out any documented behavior of any animal which is incompatible with an animals-as-robots model? The model I mean claims animals lack the general intelligence humans have and aren't able to learn anything that goes beyond the knowledge already in their genes. A good argument would explain why the animal behavior cannot be accounted for by software algorithms like the non-AGI algorithms we know how to program today and use in video games, self-driving cars, computer vision, etc.

If not, then I will conclude that there is no *evidence* that animals suffer any more than self-driving cars do (zero).

See also:

https://curi.us/2240-discussion-tree-state-of-animal-rights-debate

https://curi.us/2245-discussion-about-animal-rights-and-popper

https://curi.us/2246-animal-rights-issues-regarding-software-and-agi

https://curi.us/272-algorithmic-animal-behavior

---

The Quora version had to be extra short:

Is there scientifically documented behavior of any nonhuman animal which is incompatible with animals being robots running non-AGI software algorithms like those used in video games and self-driving cars?

Got linked to Science, Sentience, and Animal Welfare. But it doesn't discuss intelligence, computation, AGI, algorithms, etc.

#14433

>A good argument would explain why the animal behavior cannot be accounted for by software algorithms like the non-AGI algorithms we know how to program today and use in video games, self-driving cars, computer vision, etc

I concede your point that animal behaviour can be perfectly accounted for with non-AGI algorithms.

Now I would ask you to provide evidence that proves this means animals can't suffer.

"P1: If a being's behaviour cannot be differentiated from a non-AGI algorithm it cannot experience suffering.

P2: A cow's behaviour cannot be differentiated from a non-AGI algorithm.

C: A cow cannot experience suffering."

You keep asking people to prove something when in fact you have proven absolutely nothing. And please don't refer to your blog post again:

>I think capacity to suffer is related to general intelligence because suffering involves making value judgments like not wanting a particular outcome or thinking something is bad.

Your opinions alone are not logical evidence that proves your argument.

If you cannot provide a proof for P1 or structure your syllogism in a different form and then provide the proof you need, why should anyone accept your conclusions?

At this point you have spent a lot of time researching and asking questions but you haven't applied any logical rigour to your arguments. I would suggest proving your argument before asking for feedback that disproves your argument.

> I concede your point that animal behaviour can be perfectly accounted for with non-AGI algorithms.

Will you also concede that human behavior cannot be accounted for in that way?

Hi Mr Curi

#14438

>Will you also concede that human behavior cannot be accounted for in that way?

Yes, by definition an AGI algorithm is capable of learning and understanding the same tasks as a human being.

If a non-AGI could learn and understand the same tasks as a human being it wouldn't be a non-AGI.

> If a being's behaviour cannot be differentiated from a non-AGI algorithm it cannot experience suffering.

Suffering is related to preferring or wanting X and getting not-X. It requires judgments like disliking. Not mere information or math but some opinion on those things.

What else would suffering be related to other than not getting what you want or getting what you don't want? But e.g. self-driving cars do not want anything and have no opinions.

Sometimes people enjoy physical pain. This shows humans form their own opinions and it shows that information/data like that is open to intelligent interpretation. Animals don't do that stuff.

Hi Mr Curi

#14441

>Suffering is related to preferring or wanting X and getting not-X. It requires judgments like disliking.

That's a valid opinion. Do you have any logical proof or source to support it?

>What else would suffering be related to other than not getting what you want or getting what you don't want?

A simple example would be physical suffering. You can experience pain without consciously desiring the pain to stop. Another example would be the natural stress response. Your brain experiences stress due to hormones that are released, it doesn't necessarily relate to you wanting or not wanting something.

>Sometimes people enjoy physical pain.

So they can get enjoyment out of physical suffering. Why is it relevant if animals can or cannot do the same?

#14444 Do you have any alternative view that you think is better? Asking for proof is bad epistemology.

And you're mixing up physical pain (information sent from nerves) with mental suffering.

#14445

>Do you have any alternative view that you think is better?

Let's focus on your beliefs and arguments for now. I'm not the one putting forth an argument that isn't logically valid.

>It requires judgments like disliking.

>Asking for proof is bad epistemology.

Asking for proof of your assertion is bad epistemology? How can I distinguish your opinions from facts if you have no proof for your opinions?

How can you show that suffering requires judgement like disliking? So far as I can tell it is your opinion that suffering requires judgement and that's all.

#14446 Logical proof isn't the standard here, and the proper standard is the *best* known idea. If you have no alternatives, then this idea wins by default.

You need to learn epistemology. See Popper and Deutsch to begin with. http://fallibleideas.com/books#deutsch

Hi Mr Curi

>If you have no alternatives, then this idea wins by default.

Ok feel free to compare to this idea.

Humans and non-human animals have parts of their brain that are activated in response to noxious stimuli. This can be observed with neuro-imaging technology and is accepted scientific consensus. These parts of the brain are responsible for the subjective experience of suffering. Again, accepted scientific consensus.

A non-AGI has no such part of its brain and as such it does not experience physical suffering.

I do not see any reason why either the human or non-human animal need to dislike the concept of pain in order to experience pain. The fact that they experience pain is enough to constitute physical suffering.

I would then ask you for proof that preference is required in order to experience physical pain. Failing to provide such proof means I will stick with the accepted scientific consensus.

We could go into mental suffering but if you can't prove physical suffering it's not relevant at this point.

#14448 You aren't taking into account the hardware independence of computation.

You also seem to have unconceded what you already conceded?

> I concede your point that animal behaviour can be perfectly accounted for with non-AGI algorithms.

But now:

> A non-AGI has no such part of its brain and as such it does not experience physical suffering.

So you seem to be denying animals are non-AGIs, cuz you think animals suffer?

Hi Mr Curi

>You aren't taking into account the hardware independence of computation.

I agree that computation is independent of hardware.

> I concede your point that animal behaviour can be perfectly accounted for with non-AGI algorithms.

I agree that non-human animal behaviour can be modeled by a non-AGI algorithm. The physical and mental experiences that a cow has cannot be experienced by that algorithm.

Likewise, human behaviour can be modeled by an AGI algorithm, but the physical and mental experiences that a human has cannot be experienced by that algorithm.

The reason for this is that a "Roomba" has a cpu to process instructions. It's capable of a limited number of states determined by its hardware. Physically it can process 1 or 0. It can move those values from a short term location like RAM and into a processing unit. It can store other values in long term storage. It cannot emulate the regions of animal brains that are responsible for the physical sensation of pain. It simply wasn't designed to have this capability.

Human and non-human animal brains both have this capability. This is demonstrable through the use of neuro-imaging software and is in fact the scientific consensus.

>So you seem to be denying animals are non-AGIs, cuz you think animals suffer?

The scientific consensus is that non-human animals suffer. I only defer to the experts in the field.

My reply re _The Routledge Handbook of Philosophy of Animal Minds_

https://www.reddit.com/r/DebateAVegan/comments/dz3s9q/seeking_proanimal_rights_literature/f86mjff/

Thanks for the link. It seems to largely not address the issues I brought up[1], but does have a few parts agreeing with me (not conclusively), e.g. it mentions an ant vision algorithm and has this, emphasis added:

> An analogous estimation problem arises in robotics, where it is called the simultaneous localization and mapping (SLAM) problem. An autonomous navigating robot must estimate its own position along with the positions of salient landmarks. The most successful robotics solution is grounded in Bayesian decision theory, a mathematical theory of reasoning and decision-making under uncertainty. On a Bayesian approach, the robot maintains a probability distribution over possible maps of the environment, using self-motion cues and sensory input to update probabilities as it travels through space. Bayesian robotic navigation algorithms have achieved notable success (Thrun et al. 2005). Given how well Bayesian solutions to SLAM work within robotics, *it is natural to conjecture that some animals use Bayesian inference when navigating* (Gallistel 2008; Rescorla 2009). Scientists have recently begun offering Bayesian models of animal navigation (Cheng et al. 2007; Cheung et al. 2012; Madl et al. 2014; Madl et al. 2016; Penny et al. 2013). The models look promising, although this research program is still in its infancy.

[1] search results:

algorithm: 4

hardware: 1

software: 0

Turing: 0

hardware independence: 0

AGI: 0

artificial: 7 (the only one about artificial intelligence was someone's bio saying they'd studied AI)

I checked all the results that came up.
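To make the quoted passage about Bayesian navigation concrete, here is a minimal sketch of the kind of mechanical belief update it describes. It is only a toy: it does localization on a known one-dimensional map rather than full SLAM, and the corridor layout, the function names and the sensor probabilities (`p_hit`, `p_miss`) are all assumptions made up for illustration, not taken from the handbook or from Thrun et al.

```python
def normalize(p):
    """Scale a list of non-negative weights so they sum to 1."""
    total = sum(p)
    return [x / total for x in p]


def sense(belief, world, measurement, p_hit=0.8, p_miss=0.2):
    """Bayes update: weight each cell by how well it explains the measurement."""
    weighted = [
        b * (p_hit if cell == measurement else p_miss)
        for b, cell in zip(belief, world)
    ]
    return normalize(weighted)


def move(belief, step):
    """Shift the belief by `step` cells on a circular corridor (exact motion assumed)."""
    n = len(belief)
    return [belief[(i - step) % n] for i in range(n)]


# Hypothetical corridor: two doors followed by three stretches of wall.
world = ["door", "door", "wall", "wall", "wall"]

belief = [1 / len(world)] * len(world)   # start with no idea where we are
belief = sense(belief, world, "door")    # the sensor reports a door
belief = move(belief, 1)                 # move one cell to the right
belief = sense(belief, world, "wall")    # the sensor now reports a wall

best = max(range(len(belief)), key=belief.__getitem__)
print(best, [round(b, 3) for b in belief])
```

Nothing here involves creativity or understanding: the program multiplies and renormalizes numbers, yet it ends up most confident about the one cell consistent with "door, then wall".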

#14450

> I agree that computation is independent of hardware.

But you said:

> Humans and non-human animals have parts of their brain that are activated in response to noxious stimuli.

Talking about specific hardware features seems to be disagreeing about hardware independence. Unless you mean that this brain activation is non-computational!?

> The reason for this is that a "Roomba" has a cpu to process instructions. It's capable of a limited number of states determined by its hardware. Physically it can process 1 or 0. It can move those values from a short term location like RAM and into a processing unit. It can store other values in long term storage. It cannot emulate the regions of animal brains that are responsible for the physical sensation of pain. It simply wasn't designed to have this capability.

> Human and non-human animal brains both have this capability. This is demonstrable through the use of neuro-imaging software and is in fact the scientific consensus.

You are again contradicting hardware independence.

> However the physical and mental experiences that a human experiences cannot be experienced by that algorithm.

Are you advocating dualism, non-computational thinking, or what?

> The scientific consensus is that non-human animals suffer. I only defer to the experts in the field.

If you don't want to consider the issues yourself, why didn't you just say so? Also you were repeatedly asked for expert sources and wanted to argue instead...

Hi Mr Curi

#14452

>Talking about specific hardware features seems to be disagreeing about hardware independence. Unless you mean that this brain activation is non-computational!?

Certainly not my friend. However I am not aware of any algorithm that currently accurately models the physical experience of pain. If such an algorithm did exist it may not be possible to run in a binary system, like a Roomba. I'm not aware of any evidence that shows that the physical reactions in the brain operate at an entirely binary level and I'm not sure why we would make such an assumption, given that we know other models are possible.

>You are again contradicting hardware independence.

Binary hardware can compute binary data or instructions. Any binary computation can be done independent of which binary hardware it runs on. It cannot run ternary code or quantum code. This is a physical limitation of the hardware.

>If you don't want to consider the issues yourself, why didn't you just say so?

I have my personal opinion based on my own experiences with animals. I do not have any kind of rigorous experimentations or evidence as the scientific community does. Unless you have some experimental evidence that refutes their claims then I'm not sure why we would assume your idea that goes against the scientific consensus is the "best" idea.

#14454 Please stop referring to the anonymous guy you're talking with as "Hi Mr Curi"

Hi Mr Curi

#14455

Sorry, the title was autofilling from my previous posts. Do you have any comment on the post?

> Binary hardware can compute binary data or instructions. Any binary computation can be done independent of which binary hardware it runs on. It cannot run ternary code or quantum code. This is a physical limitation of the hardware.

You don't know what you're talking about. A binary computer can run an interpreter for ternary code or simulate a ternary computer. There is nothing that can be calculated in ternary code but not binary, nor vice versa. This is uncontroversial. You're simply ignorant of the topics you're making claims about. You need to read some books, study and learn.
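As a minimal sketch of that point, the Python below does base-3 arithmetic on ordinary binary hardware. The function names are illustrative (not from any library), and it uses plain ternary digits 0, 1, 2 rather than any particular ternary machine's instruction set.

```python
def to_ternary(n):
    """Return the base-3 digits (trits) of a non-negative integer, least significant first."""
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, remainder = divmod(n, 3)
        digits.append(remainder)
    return digits


def from_ternary(digits):
    """Convert a list of trits (least significant first) back to an integer."""
    return sum(d * 3**i for i, d in enumerate(digits))


def ternary_add(a, b):
    """Add two ternary numbers trit by trit with carry, as a ternary adder would."""
    result = []
    carry = 0
    for i in range(max(len(a), len(b))):
        da = a[i] if i < len(a) else 0
        db = b[i] if i < len(b) else 0
        carry, trit = divmod(da + db + carry, 3)
        result.append(trit)
    if carry:
        result.append(carry)
    return result


total = ternary_add(to_ternary(17), to_ternary(25))
print(total, from_ternary(total))
```

The trits are stored in binary memory and manipulated by binary instructions, but the results match what a ternary adder would give. The same approach extends to interpreting a whole ternary instruction set.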

And classical computers, such as brains, are not quantum computers.

> I do not have any kind of rigorous experimentations or evidence as the scientific community does. Unless you have some experimental evidence that refutes their claims then I'm not sure why we would assume your idea that goes against the scientific consensus is the "best" idea.

You have given zero sources. curi gave one: http://curi.us/272-algorithmic-animal-behavior

So the scientific evidence is 1 to 0 against you. You've been asked for scientific sources. You don't seem to actually know any.

Posted to more stack exchanges:

https://pets.stackexchange.com/questions/26591/non-robotic-animal-behaviors

https://philosophy.stackexchange.com/questions/68557/non-robotic-animal-behaviors

On the philosophy one I got a guy harassing me and a guy who gave a bad non-answer (I asked for written sources):

Hypnosifl:

>> Animals can obviously learn things beyond what's in their genes, like if you have a dog and it learns its way around your house it wasn't genetically pre-programmed with knowledge of the layout of your house. And even relatively simple software programs like neural networks can learn things that weren't pre-programmed into them. If you're asking what capabilities animals have that disprove the idea that their brains are something like complicated and fine-tuned versions of neural networks, I would ask what evidence you have that the human brain is anything more than that.

curi:

> Asserting that your conclusions are obvious is not how science works. And your example is trivially wrong. Storing map data is something basic algorithms can do with no intelligence or learning, just mechanically following a mathematical algorithm. If a dog simply mechanically does some code from its genes, just like a calculator or self-driving car follows its programming, that isn't learning anything new.
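To illustrate the claim about map data, here is a minimal sketch of a fixed algorithm that ends up storing the layout of a house it was never given in advance. The floor plan `HOUSE` and the function name `explore` are hypothetical, and breadth-first search is just one simple choice of exploration rule, not a claim about what dogs actually do.

```python
from collections import deque

# Hypothetical floor plan: '#' is a wall, '.' is open floor.
HOUSE = [
    "#######",
    "#..#..#",
    "#.....#",
    "#..#..#",
    "#######",
]


def explore(house, start):
    """Breadth-first exploration: record each reachable floor cell and its distance from start."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        row, col = queue.popleft()
        for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (row + d_row, col + d_col)
            # The outer wall guarantees the indices stay in range.
            if house[nxt[0]][nxt[1]] == "." and nxt not in distances:
                distances[nxt] = distances[(row, col)] + 1
                queue.append(nxt)
    return distances


learned_map = explore(HOUSE, (1, 1))
print(len(learned_map), learned_map[(3, 5)])
```

The `explore` function contains no knowledge of this particular house. Run on a different floor plan, the same unchanged code would store a different map.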

https://www.reddit.com/r/DebateAVegan/comments/dzbzbt/animal_behaviors_that_couldnt_be_robotic/f870cn8/

You'd have to learn Critical Rationalist epistemology for the full reasoning (which, yes, is controversial). A short answer is that a general intelligence algorithm is unlimited, infinite, unbounded in what it can do – it allows for creativity, for new stuff – which is different from all other algorithms, which are limited, finite, bounded.

Another way to look at it: it's a different number of steps in the process.

1 step:

genetic algorithm -> cow follows algorithm and eats grass

2 steps:

genetic intelligence algorithm -> intelligent thought to create new ideas, behaviors, algorithms, knowledge, etc., including about GPS and satellites -> person makes and launches satellite

My more basic point is that the distinction between general intelligence, or not, is well known and is something animal rights advocates ought to consider and address, rather than ignore (I was hoping they already had). It's e.g. a major idea in the artificial intelligence field, which is trying to create AGI (artificial *general* intelligence) and sees (and has written lots about) a difference between AGI and other things like self-driving car algorithms or the algorithm that fires a satellite's propulsion system occasionally to keep it in the right orbit.

Holy shit a guy, in all seriousness, linked me *dog telepathy* as *controlled science experiments*.

https://www.youtube.com/watch?v=9QsPWitQovM

Guy needs to learn some James Randi stuff like how his buddies fooled scientists into thinking they were telepathic. It's easy to get fooled. Ruling out a few ways you thought of that something could be accomplished, then concluding telepathy, is naive.

https://www.reddit.com/r/DebateAVegan/comments/dzbzbt/animal_behaviors_that_couldnt_be_robotic/f872q7i/

Cows don't think creatively though. Or at least, where is the evidence to merit that belief? And people don't learn by mere exposure. But as I said those were just short approximations to give you a sense of the issues, not to be a full proof.

Suffering is related to preferences or value judgments, e.g. wanting X or wanting not-Y. General intelligence is required to form ideas like those. E.g. self-driving cars don't form those sorts of ideas.

And, again, I was hoping that any animal rights advocate had worked through these issues and written it down instead of leaving us to figure it out from scratch on reddit. What is going on!? And it would be much easier for me if the animal rights case was written down so I could review it and point out where I disagree. But this whole part of the case is just a blank so there's nothing to respond to and argue with, and I'm left just trying to teach non-experts, from scratch, a huge amount of complicated material. (It's not like I expect you'll be willing to read several books and come to a serious discussion forum, you just want me to somehow take some of the best, densest books and condense them down to a few paragraphs – though let me know if you would want to do that.)

By contrast, I would be happy to refer you to some sources and you could tell me the errors, but it's a lot of reading and requires a lot of background knowledge.

https://philosophy.stackexchange.com/questions/68557/non-robotic-animal-behaviors

Typical examples of people who don't know wtf they're talking about:

Cell:

> It seems to me that this question is based on shaky premises. First humans are animals so the set of all animals clearly contains animals with human-like intelligence. Second, the common ancestor of all animals goes back very far when the brain emerged so the brains or our closest relatives aren't that radically different such that one has the ability to learn and one doesn't. And third that's not how genes work, like at all. Genes aren't comparable to programming code or "knowledge".

Conifold:

> There is no behavior incompatible with humans-as-robots "model". Universal Turing machines are called universal for a reason, they can, in principle, emulate any given behavior. When they are a good model is another question entirely. And generally, you should not conclude too much from responses on a website.

By contrast, here's someone who does know wtf they're talking about (but who isn't responding to me):

John Forkosh:

> @Conifold Re your "emulate any given behavior" That should be, "emulate any given >>computable<< behavior". So, just using the textbook halting function example: no computer can "behave" such that it answers yes/no to "Does this program halt?" for every possible program. But, given more time than I'll be alive, and given that I'm more than a pretty decent programmer, maybe I could give all the correct answers. Or maybe I couldn't. But, supposing I could, then that would represent behavior beyond which any computer is capable. And then they wouldn't be (quoting you) "a good model" .

---

Overall, still no one giving any literature that addresses my questions. I still haven't really gotten a single important thing to add to my discussion tree diagram.

Clever Crows!?

Was linked to this:

https://www.youtube.com/watch?v=ZerUbHmuY04

It shows that they repeatedly fed a crow for dropping stuff in water. Their conclusion: crows understand what they're doing practically like humans! It's understanding causality about how water levels work!

You see the first one and it sorta looks like the crow was maybe clever but they've been repeatedly trying to get the crow to do it. They keep putting work into it. This isn't just what crows can figure out, it's what people can get crows to do. The next video on autoplay was:

https://www.youtube.com/watch?v=cbSu2PXOTOc

They are like: omg how will the crow solve the puzzle with multiple different things involved?

Then they mention: the crow has already been given food rewards to train it to do each part individually.

So they are just doing a bunch of animal training behind the scenes to get crows to do a few things. Crows will repeat actions they get food for, and they occasionally do some stuff at random, which gives you the opportunity to reward them for doing it.
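The training loop described above can be sketched as a very simple reinforcement rule. This is a toy under stated assumptions: the action names, the weight update (+1 per reward) and the trial count are invented for illustration and are not a model of real crow cognition or of what the researchers in the videos did.

```python
import random

# Hypothetical repertoire of things the bird sometimes does on its own.
ACTIONS = ["peck_ground", "hop", "drop_stone_in_water"]
REWARDED = "drop_stone_in_water"  # the behavior the trainer feeds the bird for


def train(trials, seed=0):
    """Pick actions at random in proportion to their weights; a food reward raises a weight."""
    rng = random.Random(seed)
    weights = {action: 1.0 for action in ACTIONS}
    for _ in range(trials):
        action = rng.choices(ACTIONS, weights=[weights[a] for a in ACTIONS])[0]
        if action == REWARDED:
            weights[action] += 1.0  # rewarded actions become more likely next time
    return weights


weights = train(trials=200)
print(weights)
```

After enough trials the rewarded action dominates, so the simulated bird reliably "solves the puzzle" even though the rule contains no representation of water levels or causality.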